Final report for GNC22-354

Project Information

Dairy cows are particularly sensitive to heat stress, which can affect profitability due to reduced milk production and reproductive performance, and significantly deteriorate animal welfare. Climate change is likely to increase average temperatures and the frequency of severe heat waves across the US, and recent research suggests that the North Central Region is not an exception to this near-future crisis. The increasing pressure of heat stress demands more active methods of cooling, such as strong ventilation fans and spray water, which use large amounts of electricity and water. The rising cost of electricity and changes in rainfall patterns make it more challenging to maintain the profitability and sustainability of the dairy industry under severe heat stress.

For optimal mitigation of heat stress, it is crucial to detect its signs early, before it causes long-term impacts. Traditionally, farmers have used dairy cattle’s individual behavior (how they move, how much they eat and drink, where they spend time, etc.) and herd behavior (how they interact with each other) to indirectly gauge the level of heat stress and take the necessary mitigation measures. However, this approach is becoming less viable as more farms are consolidated and the number of cows managed per farmer rapidly grows.

This research proposes to use computer vision and artificial intelligence (AI) for early detection of heat stress in dairy cattle for more sustainable and profitable dairy farming in the North Central Region. We use state-of-the-art computer vision and AI technologies to monitor and analyze dairy cattle’s individual and herd behavior to help farmers and ranchers make optimal decisions to mitigate heat stress in a timely manner.

This research project introduces a comprehensive approach to precise and personalized cattle monitoring using AI and computer vision for early heat stress prediction.

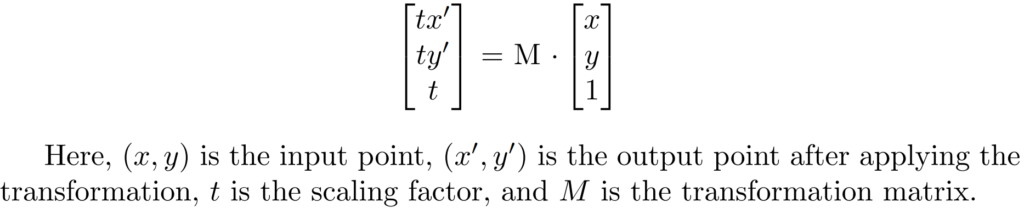

Figure 1 illustrates the proposed methodology. Images/video frames from the cameras installed inside the barn are fed into a computer vision system. It comprises machine learning (ML) models for cattle detection, individual identification, and activity recognition. The output generated from our computer vision system serves as a crucial source of information, offering valuable indicators closely linked to the occurrence of heat stress in dairy cattle. This personalized and precise data empowers farmers with actionable insights, enabling them to make well-informed and data-driven decisions in a timely manner. This promotes sustainable livestock management and animal welfare.

The outcomes of the proposed research will be disseminated to local farmers through our outreach and extension network. For more widespread adoption, we will open-source the software outcomes of the research, including collected and labeled datasets, trained computer vision models, source code, and documentation for training and inference.

Learning outcomes:

Farmers/ranchers will learn how AI can be leveraged for early prediction of heat stress in dairy cattle. They will learn about the changes in behavior in cattle which can be early indicators of heat stress and how automation in the task of behavior analysis using AI can be used in heat stress prediction. It will make them knowledgeable in performing data-driven decision-making to maximize profitability with minimal resources. The project will spread awareness among farmers and ranchers about reducing energy and water consumption through precision livestock and efficient cooling mechanisms. They will learn how they can play a central role in reducing energy and water footprint of a barn leading to sustainable livestock practices.

Researchers can use the dataset collected and labeled as a part of this project and the proposed methodologies to advance their research in the domains of heat stress mitigation, animal welfare, and sustainable agriculture using AI.

Action outcomes:

Farmers/ranchers will use the outputs of the project to mitigate financial losses incurred due to heat stress in cattle using efficient cooling techniques, for continuous monitoring of activities and location of the cattle, and to improve animal welfare. Apart from the direct utility for farmers/ranchers, the output of the project can also be used by engineers and researchers to design energy-efficient, optimal, and cost-effective cooling mechanisms to mitigate heat stress in dairy cattle. Eventually, the outputs of this project will also encourage farmers to move toward more energy-efficient and precise heat stress abatement techniques, contributing to sustainable livestock practices.

The results obtained from our methodology demonstrate its effectiveness in cattle monitoring within a barn environment. The robust performance in cattle detection, individual cow identification, and activity recognition attest to the method's practical applicability in enhancing animal welfare and farm management. Accurate cattle detection forms the foundation for subsequent monitoring phases, while individual cow identification enables personalized monitoring. The integration of these components culminates in a comprehensive understanding of cattle behavior and activity, contributing to improved decision-making. Furthermore, the integration of data from multiple cameras within the multi-camera setup allows for a broader field of view, enhancing the precision and comprehensiveness of cattle monitoring. The results and insights obtained from our methodology demonstrate a significant step towards the development of an advanced cattle monitoring system. It holds promise for a range of applications, from early heat stress prediction to optimizing farm management practices. The robustness of our approach, as evidenced by the quantitative results, underscores its potential to enhance animal welfare, increase farm productivity, and contribute to the overall sustainability of dairy farming operations.

The primary objectives of this project are as follows:

- Cattle Detection: To perform behavior recognition and heat stress prediction, it is imperative to first detect the individual cows in an image or video frame. We employ the YOLOv7 (You Only Look Once) object detection framework to accurately locate and track cattle within the barn. It is a state-of-the-art real-time object detector in terms of both speed and accuracy, making it a suitable choice for monitoring livestock.

- Individual Identification of Cows: Beyond accurate detection of cattle, this objective provides the ability to differentiate individual cows within the herd. Our approach includes a deep learning-based classifier that is capable of recognizing individual cattle within the barn. This is essential for tracking the behavior and health of each cow, facilitating personalized care and monitoring.

- Activity Classification: Understanding the activity of cattle is crucial for assessing their health and well-being. The activity recognition model not only enhances our understanding of individual cattle behavior but also provides valuable insights into the collective dynamics of the herd. We employ a convolutional neural network-based classifier to distinguish between two key activities: "standing" and "lying." This classification provides insights into the behavior and comfort of the cattle.

- Generation of a Comprehensive 2D Barn Map: This map serves as a valuable tool for farm management, allowing for the efficient monitoring of each cow's location, identification, and activity status. By applying a perspective transformation, we convert the camera's isometric view of the barn into a top-down view, enabling the display of cattle positions, individual IDs, and activity status on the map.

- Data Collection: Good-quality data is the key enabler of modern AI algorithms. One of the important research objectives of this project is to collect and label a high-quality dataset for the tasks of cattle detection, identification, and heat stress prediction.

Research

Overview

Computer vision is a field of artificial intelligence (AI) that focuses on enabling computers to interpret and understand visual information from images or videos. It allows them to extract meaningful insights through tasks such as object detection and video-based decision-making. A typical computer vision system uses a camera as its input device, which captures visual information in the form of image/video frames. This input is fed into a machine learning model that has been trained on datasets for specific tasks, such as identifying and locating objects in an image. The model produces output that provides a visual understanding of the input image or video, which can then be used for various applications.

Recent advances in machine learning applied to visual recognition tasks have enabled the use of computer vision systems in real-world scenarios in various domains, including agriculture and dairy industries. Modern livestock management has evolved significantly in recent years, with a growing emphasis on precision agriculture and the integration of advanced technologies to enhance the well-being of animals and the efficiency of farming operations. In this context, the development of intelligent systems for monitoring and data-driven decision-making has become increasingly vital in the livestock industry.

This research project introduces a comprehensive approach to precise and personalized cattle monitoring using AI and computer vision for early heat stress prediction. We develop a computer vision system that processes images/videos obtained from a camera installed in a barn, yielding the following outcomes:

- Detection and identification of individual cows in an image/video frame.

- Mapping of individual cows’ locations onto the ground plane, compatible with both single-camera and multi-camera configurations.

- Activity recognition of individual cows, such as standing and lying.

- A comprehensive 2D Barn Map with information on individual cows’ location and activities.

Figure 1 illustrates the proposed methodology. Images/video frames from the cameras installed inside the barn are fed into a computer vision system. It comprises machine learning (ML) models for cattle detection, individual identification, and activity recognition. The output generated from our computer vision system serves as a crucial source of information, offering valuable indicators closely linked to the occurrence of heat stress in dairy cattle. This personalized and precise data empowers farmers with actionable insights, enabling them to make well-informed and data-driven decisions in a timely manner. This promotes sustainable livestock management and animal welfare.

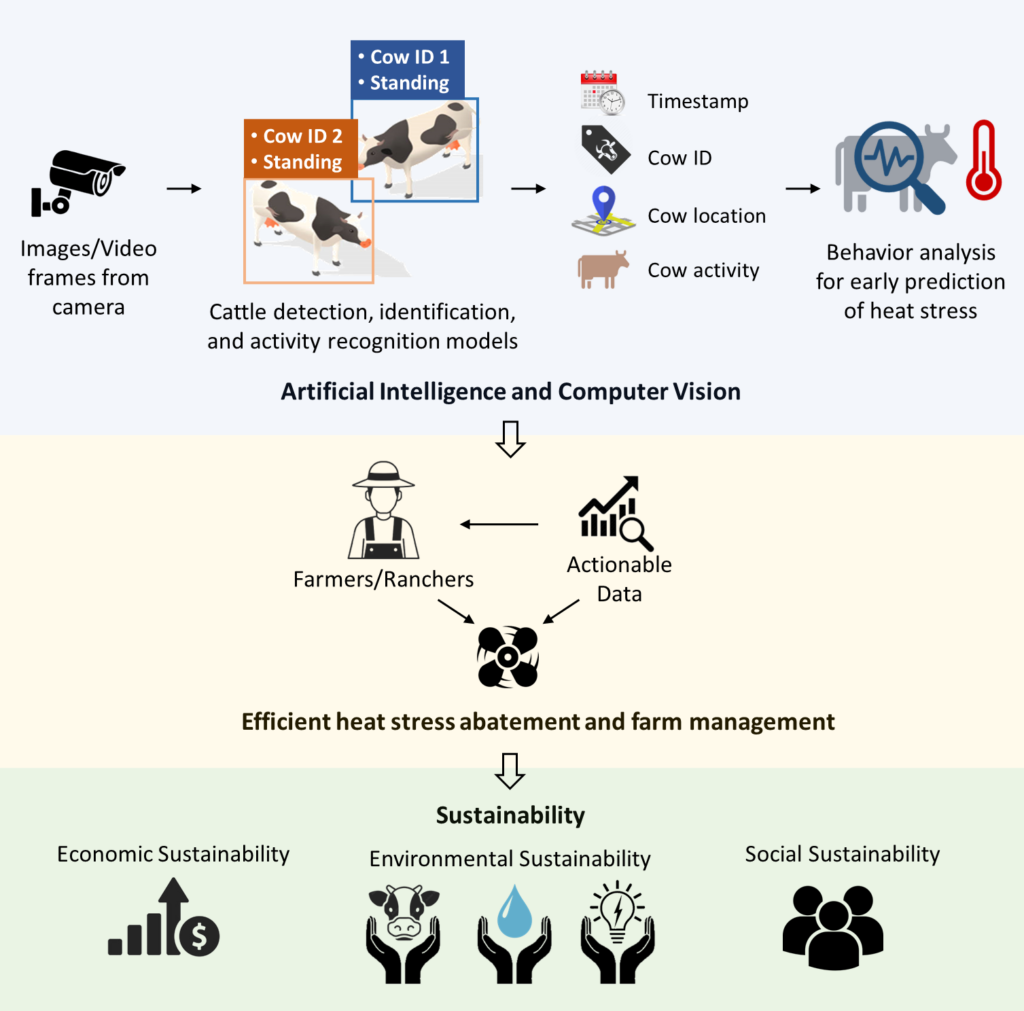

We now describe our computer vision system in detail. Figure 2 illustrates the design of our proposed methodology. The proposed computer vision system integrates cow detection, identification, and activity recognition machine learning models followed by a geometric transformation to produce a 2D map of the cow locations.

The steps involved in this methodology are discussed in detail below:

Cow Detection

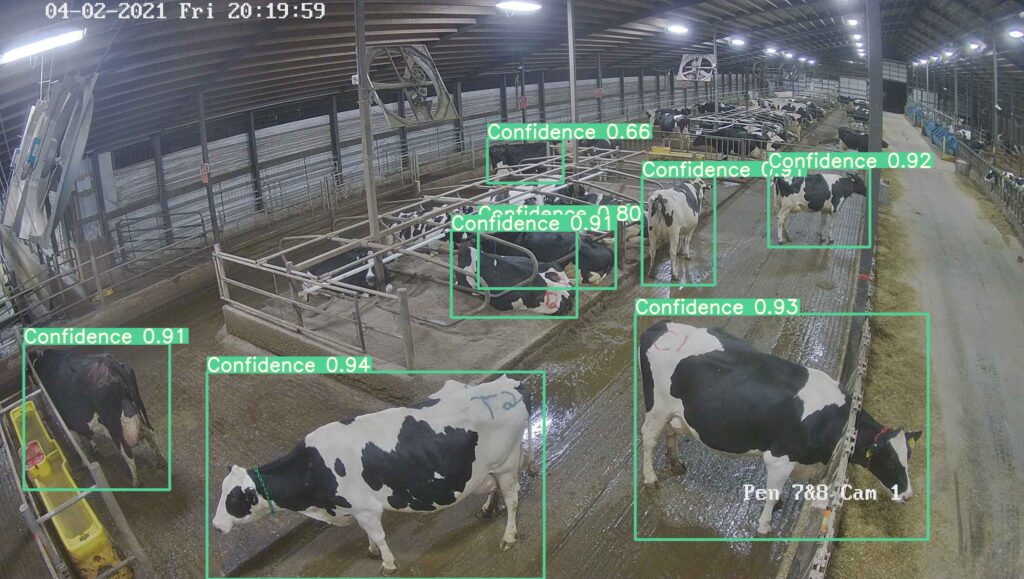

The initial step of our approach involves the detection of cattle in the input images/video using a deep learning-based object detection model, as shown in Figure 2 (a). An object detection model outputs (i) a set of bounding boxes that enclose the objects of interest, (ii) an object label for every detected bounding box (e.g. ‘cow’) denoting what the object is, and (iii) a confidence score for each bounding box.

A labeled dataset is required to train an object detector. Each image is annotated with bounding boxes for the cows present in that image. Bounding box annotations in an object detection dataset are a form of metadata used to define the location and extent of specific objects within images or frames of a video. They consist of rectangles enclosing the objects of interest (e.g. cows) in the images or frames. The rectangle is typically defined using the (x, y) coordinates of the top-left and bottom-right corners of the bounding box. Each bounding box is associated with a specific class label describing the object it encloses. These class labels are used to identify and categorize the objects present in the image. The annotations are used as ground truth data during the training of our cattle detection model. The model learns to predict bounding boxes and their corresponding class labels for objects within new, unseen images. When the model predicts bounding boxes on test or validation data, the annotations serve as a reference against which the model's predictions are measured.
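To make the annotation format concrete, the following minimal sketch stores one corner-format bounding box and converts it to the normalized center format (x_center, y_center, width, height) that YOLO-style label files commonly use. The class name `BoxAnnotation`, the function name, and the example pixel values are hypothetical and chosen only for illustration; they are not taken from the project's code or dataset.

```python
from dataclasses import dataclass


@dataclass
class BoxAnnotation:
    """One ground-truth box in pixel coordinates (corner format)."""
    label: str      # class label, e.g. "cow"
    x_min: float    # top-left corner, x
    y_min: float    # top-left corner, y
    x_max: float    # bottom-right corner, x
    y_max: float    # bottom-right corner, y


def to_normalized_center_format(box, image_width, image_height):
    """Convert a corner-format box to (x_center, y_center, width, height),
    each divided by the image size so the values fall in [0, 1]."""
    x_center = (box.x_min + box.x_max) / 2.0 / image_width
    y_center = (box.y_min + box.y_max) / 2.0 / image_height
    width = (box.x_max - box.x_min) / image_width
    height = (box.y_max - box.y_min) / image_height
    return (x_center, y_center, width, height)


# Hypothetical annotation on a 1920x1080 frame (illustrative values only).
example = BoxAnnotation("cow", x_min=480, y_min=270, x_max=960, y_max=540)
normalized = to_normalized_center_format(example, image_width=1920, image_height=1080)
print(normalized)
```

Normalizing by the image size keeps the annotation valid if the frame is later resized for training.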

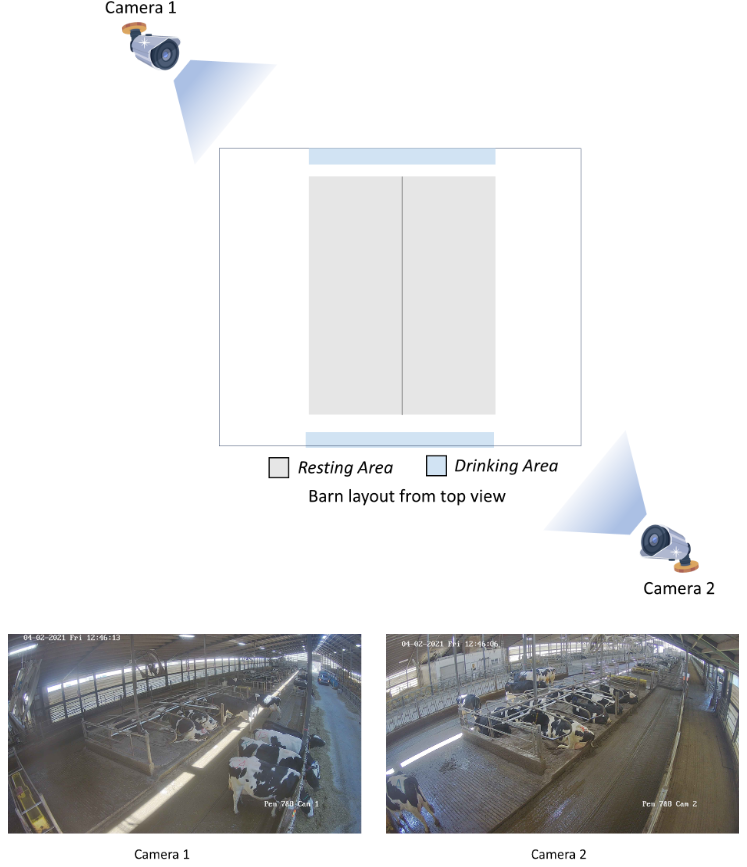

We employ YOLOv7 (Wang et al., 2023) as our object detection model. It is a state-of-the-art real-time object detector in terms of both speed and accuracy. Images/videos captured by a camera in the barn serve as the input to our cattle detection model. We train the model for the task of cattle detection using our labeled dataset of dairy barn images and annotations. The dataset was collected using a video surveillance system of two cameras at the Emmons Blaine Dairy Cattle Research Center in Arlington, Wisconsin. It covers a wide variety of cow locations, orientations, occlusions, and postures. The dataset consists of 2,023 annotated images, which were split into train, validation, and test datasets as described in Table 1.

| Dataset split | Number of images |
|---|---|
| Train data | 1,447 |
| Validation data | 192 |
| Test data | 384 |

Table 1. The dataset size and splits used for training and evaluation of the cattle detection model

Table 2 lists important hyperparameters used to train the model.

| Hyperparameter | Value |
|---|---|
| Epochs | 300 |
| Batch size | 16 |
| Optimizer | Stochastic Gradient Descent |
| Learning rate | 0.001 |

Table 2. Hyperparameters used to train the cattle detection model

The output of the cow detection model provides the location of each cow in an image, which serves as input to the subsequent steps of the methodology, as shown in Figure 2.

Individual Cow Identification

Following the initial step of cow detection, the next phase in our methodology consists of individual cow identification. Once the presence of cows is predicted in an image through the cow detection process, we isolate and extract individual cow images from the original input image. The bounding boxes obtained from the previous step give us the precise localization of each cow within the image, allowing us to generate image crops that each contain a single cow. These individual cow images serve as the input to our identification model, as shown in Figure 2 (b).
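The cropping step can be sketched as follows. This is a simplified illustration that represents an image as a plain list of pixel rows so that it runs without any imaging library; the function name `crop_detections` and the toy image are hypothetical, and a real pipeline would more likely slice an array loaded with an imaging library.

```python
def crop_detections(image, boxes):
    """Return one crop per box. `image` is a list of pixel rows; each box is
    (x_min, y_min, x_max, y_max) in pixels, with the max edges exclusive."""
    height = len(image)
    width = len(image[0]) if height else 0
    crops = []
    for x_min, y_min, x_max, y_max in boxes:
        # Clamp to the image bounds; detectors can predict slightly outside.
        left, top = max(0, int(x_min)), max(0, int(y_min))
        right, bottom = min(width, int(x_max)), min(height, int(y_max))
        if right <= left or bottom <= top:
            continue  # skip degenerate boxes
        crops.append([row[left:right] for row in image[top:bottom]])
    return crops


# Toy 4x6 "image" whose pixel value encodes its (row, column) position.
image = [[(r, c) for c in range(6)] for r in range(4)]
crops = crop_detections(image, [(1, 0, 4, 2), (4, 2, 9, 7)])
print(len(crops), len(crops[0]), len(crops[0][0]))
```

The second box extends past the image border and is clamped rather than discarded, so a cow standing at the edge of the frame still yields a usable crop.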

To accomplish the task of individual cow identification, we employ a convolutional neural network (CNN) as our image classification model. This model is specifically designed to classify each cropped image into one of 16 predefined labels. These labels represent distinct identities assigned to individual cows within the monitored barn environment.

The image classification model, trained on a dataset that encompasses the diversity of cows present in the barn, has the capability to recognize unique features, patterns, or characteristics associated with each cow. This recognition enables the assignment of individual IDs to the cows, facilitating subsequent tracking and monitoring of their activities.

We use EfficientNet (Tan and Le, 2019) as our model architecture since it offers a good balance between model size, accuracy, and speed. The classification head of the model is modified so that the last layer consists of 16 nodes, which output 16 values between 0 and 1 representing the probability scores for each cow. We train the model using 12,547 annotated images, which form the training dataset. These images are obtained by cropping the bounding box regions of each cow in the original images. Each image crop is saved as a separate image file and labeled with the ID of the cow present in the crop. Our validation data consists of 1,656 samples, which are used to select the best hyperparameters. The model performance is evaluated on 3,086 test images.

| Dataset split | Number of images |
|---|---|
| Train data | 12,547 |
| Validation data | 1,656 |
| Test data | 3,086 |

Table 3. The dataset size and splits used for training and evaluation of the cattle identification model
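The 16-way classification head described above outputs one score per cow. As a minimal sketch of how such scores can be turned into an identity, the code below assumes the probabilities are produced by a softmax over the 16 raw outputs; that choice, the function names, and the example scores are assumptions made for illustration, not a description of the trained model.

```python
import math

NUM_COWS = 16  # number of identity classes stated in the text


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_cow_id(logits):
    """Return (cow_index, probability) for the most likely identity."""
    if len(logits) != NUM_COWS:
        raise ValueError(f"expected {NUM_COWS} scores, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(NUM_COWS), key=lambda i: probs[i])
    return best, probs[best]


# Hypothetical raw outputs for one crop: class 5 has the largest score.
logits = [0.0] * NUM_COWS
logits[5] = 4.0
cow_index, confidence = predict_cow_id(logits)
print(cow_index, round(confidence, 3))
```

The returned probability can also serve as a rough reliability signal, for example to flag crops whose top score is low for manual review.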

The model was trained for 100 epochs using the cross-entropy loss function. The model is evaluated on the validation set after each epoch, and the best-performing model is saved. Important training hyperparameters are listed in Table 4.

| Hyperparameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 32 |
| Optimizer | Stochastic Gradient Descent |
| Learning rate | 0.001 |

Table 4. Hyperparameters used to train the cattle identification model
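The checkpoint-selection rule used during training (evaluate on the validation set after each epoch and keep the best model) can be sketched as below. The validation scores are made-up numbers for illustration and are not results from this project; the function name is hypothetical.

```python
def select_best_epoch(validation_scores):
    """Return (epoch, score) of the best validation result.
    Epochs are numbered from 1; ties keep the earliest epoch."""
    best_epoch, best_score = None, float("-inf")
    for epoch, score in enumerate(validation_scores, start=1):
        if score > best_score:
            # In a real training loop, this is where the weights would be saved.
            best_epoch, best_score = epoch, score
    return best_epoch, best_score


# Hypothetical validation accuracies for five epochs (not project results).
scores = [0.61, 0.74, 0.83, 0.81, 0.83]
best_epoch, best_score = select_best_epoch(scores)
print(best_epoch, best_score)
```

Selecting on validation data rather than test data keeps the test set untouched for the final evaluation.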

This step of individual cow identification provides not only accurate detection of cattle but also the ability to differentiate individual cows within the herd, enabling personalized monitoring of the cows.

Activity Recognition

Following the steps of cow detection and identification, the next phase in our methodology consists of the critical task of activity recognition. We employ an image classification model trained to distinguish between two fundamental cattle behaviors: "standing" and "lying". As in the previous step of cow identification, individual cow images are cropped from the original input image using the bounding box coordinates generated in the cow detection step as shown in Figure 2 (c). The image classification model is designed to analyze each cropped cow image and determine whether the cow is in a "standing" or "lying" position. This determination relies on the recognition of distinct visual cues and patterns associated with these activities, such as posture, limb positioning, and overall body orientation.

As in the step of individual identification, we use EfficientNet (Tan and Le, 2019) as our model architecture. The classification head of the model is modified for binary classification. The model is trained using 2,513 annotated images, which form the training dataset. These images are obtained by cropping the bounding box regions of each cow in the original images. Each image crop is saved as a separate image file and labeled with the activity of the cow in the crop ("standing" or "lying"). Our validation data consists of 384 samples, which are used to select the best hyperparameters. The model performance is evaluated on 736 test images.

| Dataset split | Number of images |
|---|---|
| Train data | 2,513 |
| Validation data | 384 |
| Test data | 736 |

Table 5. The dataset size and splits used for training and evaluation of the activity recognition model

The model was trained for 100 epochs using the cross-entropy loss function. The model is evaluated on the validation set after each epoch, and the best-performing model is saved. Important training hyperparameters are listed in Table 6.

| Hyperparameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 32 |
| Optimizer | Stochastic Gradient Descent |
| Learning rate | 0.001 |

Table 6. Hyperparameters used to train the activity recognition model

The utilization of the activity recognition model not only enhances our understanding of individual cattle behavior but also provides valuable insights into the collective dynamics of the herd. This information is integral for farm management and the early identification of potential issues, including environmental discomfort and health concerns such as heat stress.

The incorporation of activity recognition into our methodology complements the broader objective of comprehensive cattle monitoring. By providing insights into the behavior of each cow, we enable prompt responses to changing conditions, contributing to improved animal welfare and the overall efficiency of dairy farming operations.

Generation of a Comprehensive 2D Barn Map

Building upon the insights and data obtained from the preceding steps in our methodology, we generate a 2D map representing the locations, ID, and activities of the cows within the barn as shown in Figure 2 (c).

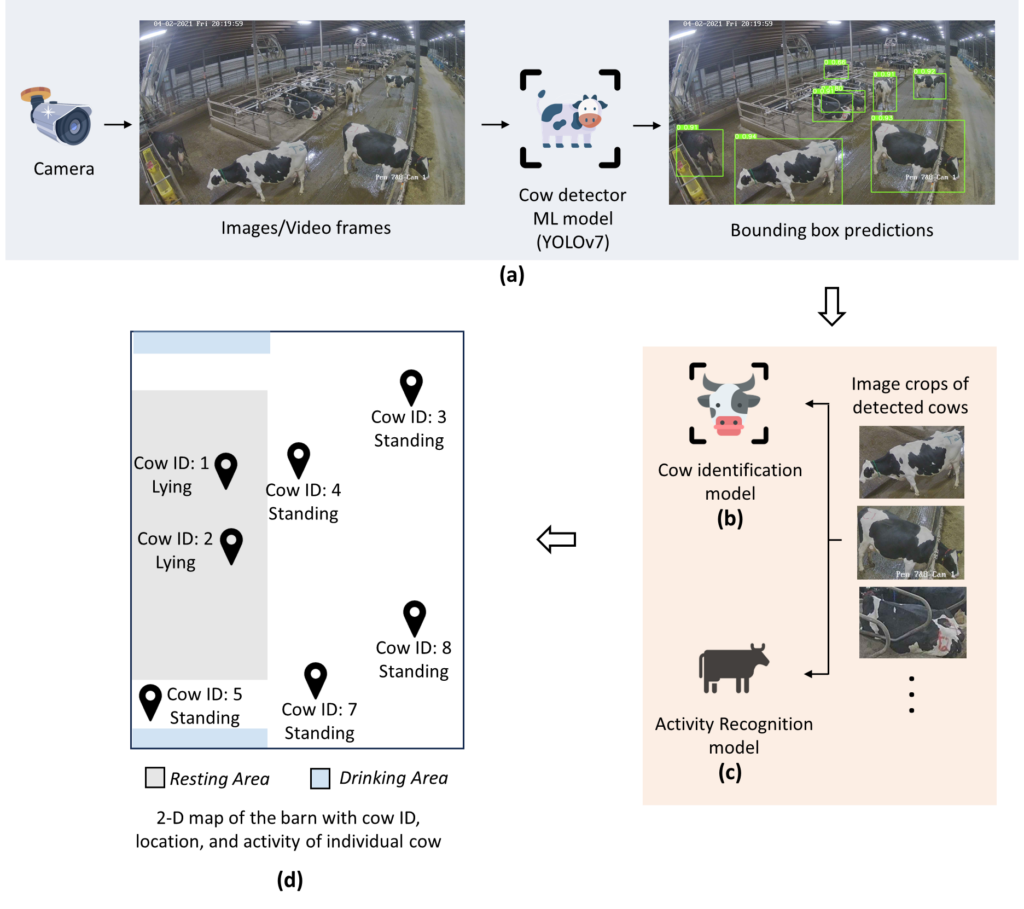

The bounding boxes obtained in the first step of cow detection are expressed in the frame of reference of the 2D image. The top-left corner of the image serves as the origin, and the bounding box coordinates are measured in pixels with respect to this origin. To translate these bounding box detections into a real-world frame of reference, we employ a technique known as perspective transformation. It is a type of geometric (projective) transformation used to transform an image from one perspective (e.g. isometric view) to another (e.g. top view). As shown in Figure 3 below, perspective transformation maps the ground plane in the barn to a 2D plane in the map.

This transformation is instrumental in mapping points on a plane from the isometric view of the image frame to a top view that aligns with the barn's actual layout as shown in Figure 3.

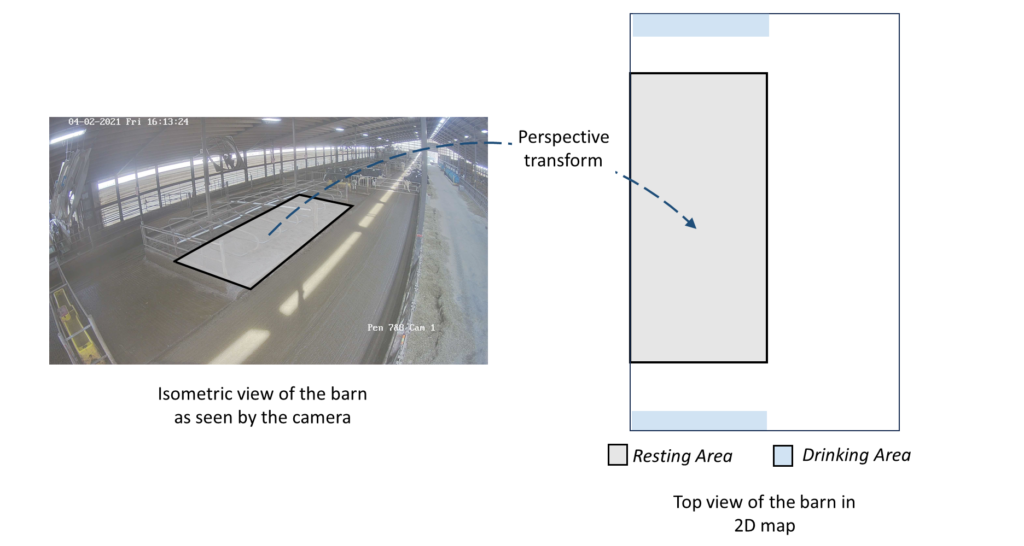

Mathematically, a perspective transform is linear in homogeneous coordinates: each input point is multiplied by a 3×3 transformation matrix, and the result is divided by its third component to obtain the transformed point.
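In its standard form, a planar perspective transform can be written as follows. The symbols are illustrative notation introduced here: (x, y) is a point in the camera image, (X, Y) is the corresponding point on the top-view map, w is the homogeneous scale factor, and h_ij are the entries of the 3×3 transformation matrix.

```latex
\begin{bmatrix} x' \\ y' \\ w \end{bmatrix}
=
\begin{bmatrix}
h_{11} & h_{12} & h_{13} \\
h_{21} & h_{22} & h_{23} \\
h_{31} & h_{32} & h_{33}
\end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix},
\qquad
X = \frac{x'}{w}, \quad Y = \frac{y'}{w}
```

Because the matrix is defined only up to scale, it has eight degrees of freedom and can be estimated from four point correspondences between the image and the map, provided no three of the points are collinear.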

Since the bottom-left and bottom-right points of the bounding box can be approximated to be lying on the ground plane, we calculate the mean of these points to obtain the location of the cows on the ground plane of the image. Perspective transform is then applied to these points to obtain the location of the cows in the top view. Additionally, we incorporate the unique cow IDs established through the individual cow identification model, as well as the recognized activity labels derived from the activity recognition model. The resulting 2D barn map, enriched with cow IDs and activity information, offers an intuitive overview of the herd's dynamics as shown in Figure 2 (c). This map serves as a valuable tool for farm management, allowing for the efficient monitoring of each cow's location, identification, and activity status.
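A minimal sketch of this mapping is given below, assuming bounding boxes in (x_min, y_min, x_max, y_max) pixel format and a 3×3 transformation matrix that has already been estimated. The matrix values, the box, and the function names are hypothetical and serve only to show the arithmetic.

```python
def ground_point(box):
    """Midpoint of the bottom edge of a box (x_min, y_min, x_max, y_max).
    Image y grows downward, so the bottom edge is at y_max."""
    x_min, _, x_max, y_max = box
    return ((x_min + x_max) / 2.0, y_max)


def apply_perspective(matrix, point):
    """Map an image point to map coordinates with a 3x3 matrix."""
    x, y = point
    u = matrix[0][0] * x + matrix[0][1] * y + matrix[0][2]
    v = matrix[1][0] * x + matrix[1][1] * y + matrix[1][2]
    w = matrix[2][0] * x + matrix[2][1] * y + matrix[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this transform")
    return (u / w, v / w)  # divide by w to leave homogeneous coordinates


# Hypothetical matrix for illustration only; a real one would be estimated
# from at least four known floor points seen in the camera image.
H = [
    [1.0, 0.0, 0.0],
    [0.0, 2.0, 0.0],
    [0.0, 0.001, 1.0],
]
box = (100.0, 300.0, 300.0, 500.0)
foot = ground_point(box)
map_xy = apply_perspective(H, foot)
print(foot, map_xy)
```

Averaging the bottom-left and bottom-right corners gives the midpoint of the bottom edge, which is the point most likely to lie on the barn floor.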

Integration of Multi-Camera Setup

While the previous steps successfully yield a 2D barn map for individual camera inputs, the expansion of our monitoring capabilities to encompass a multi-camera setup necessitates a coherent strategy for merging the results and generating a unified 2D map that covers regions of overlapping camera fields of view. This consolidation process ensures a holistic representation of the barn environment, especially when different cameras capture the same cows. Figure 4 shows the images captured by two cameras installed diagonally across the pen, where multiple cows are visible in both cameras.

In such a scenario where multiple cameras are deployed, some cows appear in only a single camera view, whereas the rest appear in both camera views. To create the consolidated 2D map for such shared areas, we apply a weighted average approach based on the predicted locations of these common cow detections.

The weights assigned to each detection are derived from the confidence scores provided by the detection models. These confidence scores signify the certainty of detections: higher scores correspond to more confident detections. As such, they serve as a basis for determining the contribution of each camera's observation to the final 2D map for the overlapping regions. Thus, the final location of the cow is determined by the weighted average of the locations predicted in the images of each camera, where the weights are the normalized confidence scores of the bounding box detections.
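The weighted average can be sketched as follows, assuming the detections of the same cow in different cameras have already been matched and mapped to the top view. The function name and the example positions and confidence scores are hypothetical.

```python
def fuse_locations(observations):
    """Combine map positions of one cow seen by several cameras.
    `observations` is a list of ((x, y), confidence) pairs."""
    total = sum(conf for _, conf in observations)
    if total <= 0:
        raise ValueError("at least one observation needs positive confidence")
    # Normalizing by the total makes the weights sum to 1.
    x = sum(pos[0] * conf for pos, conf in observations) / total
    y = sum(pos[1] * conf for pos, conf in observations) / total
    return (x, y)


# Hypothetical example: the same cow mapped from two cameras.
camera_a = ((4.0, 10.0), 0.9)
camera_b = ((6.0, 12.0), 0.3)
fused = fuse_locations([camera_a, camera_b])
print(fused)
```

In this example the fused position lies three times closer to the estimate from camera A, whose detection is three times as confident. A cow seen by a single camera keeps its original position.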

This technique takes into account not only the presence and locations of cows within overlapping regions but also the level of confidence associated with each detection. This results in an accurate and comprehensive representation of cow positions and activities across the entire barn, regardless of the number of cameras used. By integrating data from multiple camera sources and merging it into a unified 2D map, our methodology accommodates a broader field of view, enhances monitoring precision, and provides a more holistic view of the barn environment. This consolidation facilitates the comprehensive management and monitoring of cattle, contributing to improved animal welfare and the efficiency of dairy farming operations.

References

- Wang, Chien-Yao, Alexey Bochkovskiy, and Hong-Yuan Mark Liao. "YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023.

- Tan, Mingxing, and Quoc Le. "EfficientNet: Rethinking model scaling for convolutional neural networks." International Conference on Machine Learning. PMLR, 2019.

In this section, we present the results obtained from the implementation of our comprehensive cattle monitoring methodology. The results encompass key performance metrics for each phase of our approach, including cattle detection, cow identification, and activity recognition. The outcomes shed light on the efficacy and accuracy of our methodology in facilitating the monitoring of cattle within a barn environment.

Cow Detection

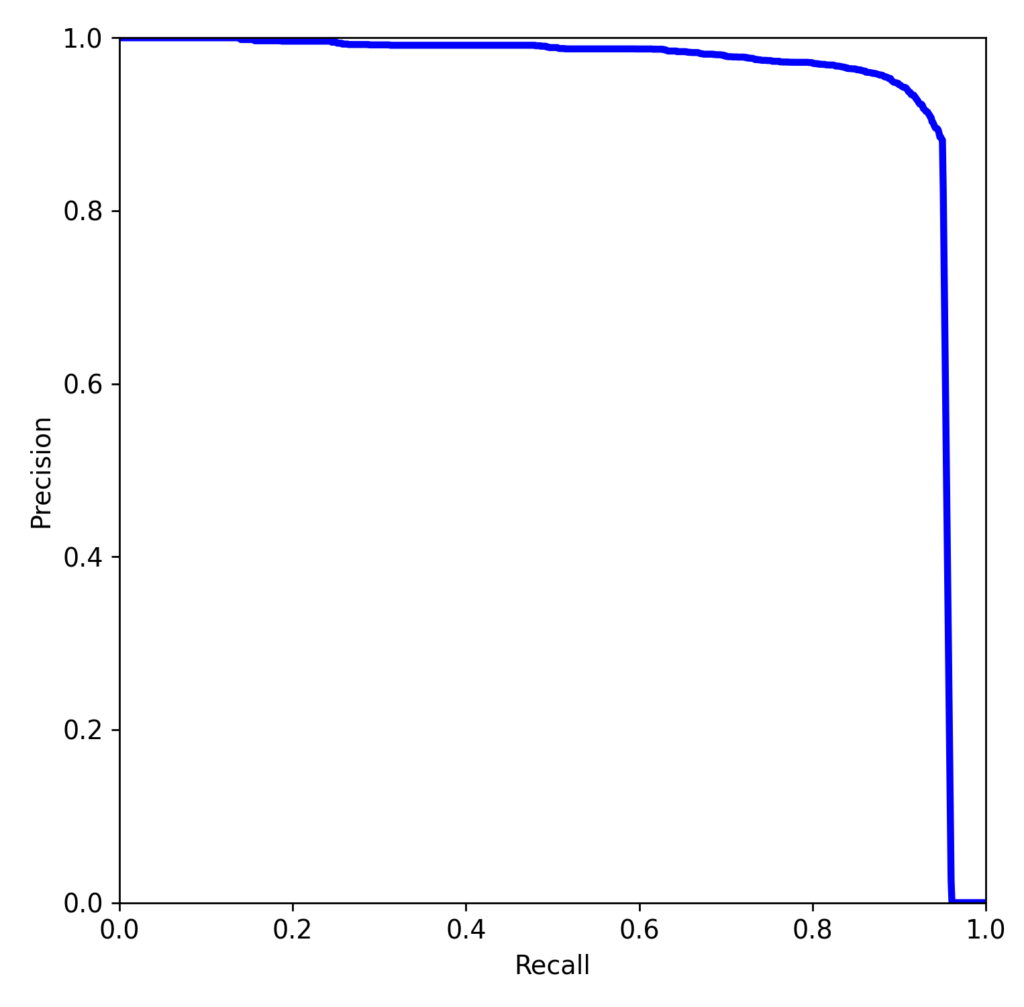

The first component of our methodology involves the detection of cattle within the barn using the YOLOv7 object detection framework. We assessed the performance of this detector using the Precision-Recall curve and the mean Average Precision (mAP) metric at an Intersection over Union (IoU) threshold of 0.5. These are standard metrics employed in the evaluation of object detection models.
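For reference, IoU is the area of overlap between a predicted box and a ground-truth box divided by the area of their union. The sketch below assumes boxes in (x_min, y_min, x_max, y_max) format; the helper names and example boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    inter_h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # boxes do not overlap
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return intersection / (area_a + area_b - intersection)


def is_true_positive(predicted, ground_truth, threshold=0.5):
    """A prediction counts as correct when its IoU reaches the threshold."""
    return iou(predicted, ground_truth) >= threshold


prediction = (0, 0, 10, 10)
truth = (0, 0, 10, 20)
print(iou(prediction, truth), is_true_positive(prediction, truth))
```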

Figure 5 shows the Precision-Recall curve evaluated on the test data set for cattle detection. The Precision-Recall (PR) curve is plotted by varying the confidence threshold of the model. At different thresholds, precision and recall values are calculated, creating points on the graph. Connecting these points forms the PR curve, where precision is on the y-axis and recall is on the x-axis. As illustrated in the PR curve, our cattle detection system exhibits a compelling performance profile. A high value of precision signifies its capacity to accurately locate cattle instances within the barn environment. At the same time, it demonstrates a high recall value, ensuring that a significant proportion of true positive cases is successfully detected.
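The construction of the curve can be sketched as follows. Sorting detections by confidence and adding them one at a time is equivalent to lowering the confidence threshold step by step. The detections listed are hypothetical and are not taken from the test set.

```python
def precision_recall_points(detections, num_ground_truth):
    """`detections` is a list of (confidence, is_true_positive) pairs.
    Returns (recall, precision) pairs, one per confidence cut-off."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    points, true_pos, false_pos = [], 0, 0
    for _, is_tp in ranked:
        if is_tp:
            true_pos += 1
        else:
            false_pos += 1
        precision = true_pos / (true_pos + false_pos)
        recall = true_pos / num_ground_truth
        points.append((recall, precision))
    return points


# Hypothetical detections: 4 predictions against 4 annotated cows.
detections = [(0.95, True), (0.90, True), (0.60, False), (0.40, True)]
curve = precision_recall_points(detections, num_ground_truth=4)
for recall, precision in curve:
    print(f"recall={recall:.2f} precision={precision:.2f}")
```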

Further, we calculate the mean Average Precision (mAP) score, a metric that captures the overall performance of an object detection model. The mAP score takes into account the precision-recall values at different decision thresholds and provides a single value that quantifies the quality of the model's predictions. On the test dataset, our model achieves a high mAP score of 0.9390, indicating the efficacy and robustness of our approach in accurately locating cattle within the barn. Figure 6 shows a sample output of the trained model on a test sample. We observe that the model successfully detects cows across a wide variety of locations, orientations, occlusions, and postures.
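One common way to compute Average Precision is as the area under the precision-recall curve after making precision non-increasing in recall (all-point interpolation); mAP is then the mean over classes, which for a single "cow" class equals that class's AP. The sketch below follows this definition as an assumption. Evaluation toolkits differ in their interpolation details, so it may not reproduce the reported score exactly, and the example points are hypothetical.

```python
def average_precision(points):
    """Area under a precision-recall curve given (recall, precision) pairs
    sorted by increasing recall, using all-point interpolation."""
    recalls = [0.0] + [r for r, _ in points]
    precisions = [0.0] + [p for _, p in points]
    # Make precision non-increasing from right to left (the "envelope").
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    area = 0.0
    for i in range(1, len(recalls)):
        area += (recalls[i] - recalls[i - 1]) * precisions[i]
    return area


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical curve points (recall, precision), not project results.
points = [(0.25, 1.0), (0.5, 1.0), (0.5, 2 / 3), (0.75, 0.75)]
ap_cow = average_precision(points)
map_score = mean_average_precision({"cow": ap_cow})
print(ap_cow, map_score)
```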

Cow Identification

The subsequent step in our methodology focuses on individual cow identification, a crucial task for personalized cattle monitoring. We evaluated the accuracy, precision, recall, and F-1 scores (macro average to mitigate class imbalance, if any) of our cow identification model. The values are provided in Table 7.

| Performance metric | Value |
|---|---|
| Accuracy | 0.8927 |
| Precision | 0.8896 |
| Recall | 0.8951 |
| F-1 score | 0.8904 |

Table 7. Cow identification model performance evaluated on test dataset

The obtained accuracy score in this phase attests to the effectiveness of our identification model in distinguishing and labeling each cow within the barn accurately.
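Macro averaging computes precision, recall, and F-1 separately for each cow and then takes the unweighted mean, so cows with fewer test images count as much as cows with many. A minimal sketch is shown below, assuming macro F-1 is the mean of the per-class F-1 scores; the labels are hypothetical and are not taken from the test set.

```python
def macro_metrics(true_labels, predicted_labels):
    """Accuracy plus macro-averaged precision, recall, and F1."""
    pairs = list(zip(true_labels, predicted_labels))
    classes = sorted(set(true_labels) | set(predicted_labels))
    precisions, recalls, f1s = [], [], []
    for cls in classes:
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(classes)
    return {
        "accuracy": sum(t == p for t, p in pairs) / len(pairs),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical labels for six crops and three cows (not project data).
truth = ["cow_1", "cow_1", "cow_2", "cow_2", "cow_3", "cow_3"]
preds = ["cow_1", "cow_2", "cow_2", "cow_2", "cow_3", "cow_1"]
metrics = macro_metrics(truth, preds)
print(metrics)
```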

Activity Recognition

The activity recognition phase is designed to classify cow behavior into "standing" or "lying". We evaluated the accuracy, precision, recall, and F-1 scores (macro average to mitigate class imbalance, if any) of our activity recognition model. The values are provided in Table 8.

| Performance metric | Value |
|---|---|
| Accuracy | 0.9728 |
| Precision | 0.9595 |
| Recall | 0.9547 |
| F-1 score | 0.9571 |

Table 8. Activity recognition model performance evaluated on test dataset

The activity recognition accuracy underscores the reliability of our approach in gauging the behavior and well-being of cattle based on their postures and activities.

Discussion of Results

The results obtained from our methodology demonstrate its effectiveness in cattle monitoring within a barn environment. The robust performance in cattle detection, individual cow identification, and activity recognition attests to the method's practical applicability in enhancing animal welfare and farm management.

Accurate cattle detection forms the foundation for subsequent monitoring phases, while individual cow identification enables personalized monitoring. The integration of these components culminates in a comprehensive understanding of cattle behavior and activity, contributing to improved decision-making.

Furthermore, the integration of data from multiple cameras within the multi-camera setup allows for a broader field of view, enhancing the precision and comprehensiveness of cattle monitoring.

The results and insights obtained from our methodology demonstrate a significant step towards the development of an advanced cattle monitoring system. It holds promise for a range of applications, from early heat stress prediction to optimizing farm management practices. The robustness of our approach, as evidenced by the quantitative results, underscores its potential to enhance animal welfare, increase farm productivity, and contribute to the overall sustainability of dairy farming operations.

Educational & Outreach Activities

Participation Summary:

Dr. Van Os, a collaborator on this project, reached out to U.S. dairy industry personnel who serve as supporting advisors to dairy farmers on topics relating to heat stress, heat abatement, and ventilation, including dairy consultants, nutritionists, veterinarians, extension educators, and fan/ventilation-system manufacturers and distributors. These audiences were reached through both in-person workshops and webinars delivered by Dr. Van Os.

Dr. Dorea, a collaborator on this project, delivered webinars on the applications of computer vision in the dairy industry.

The project's findings and dataset will be disseminated to researchers via publications, conference talks, posters, and seminars.

Project Outcomes

Economic benefits for the farmers:

The economic growth of the dairy sector is closely intertwined with the health and productivity of the cattle. Heat stress in dairy cattle results in reduced milk production, reduced reproductive efficiency, and increased fatalities, leading to financial losses for farmers and ranchers. The dairy industry in the North Central Region loses $249 million in revenue every year due to heat stress in cattle (St-Pierre et al., 2003). Accurate and precise detection of heat stress can help farmers maintain the health of their herds and optimize milk production. This, in turn, enhances profitability and economic sustainability.

Environmental benefits for the farmers:

Current heat abatement methods using fans, sprays, and soakers consume large amounts of electricity and water, as much as 27 liters of water per cow daily, which makes the water and energy footprint a major sustainability concern. The project can aid the development and adoption of cooling mechanisms that are more precise, optimal, and cost-effective. For instance, optimized cooling mechanisms can reduce water consumption by 50% and energy consumption by 18% (Drwencke et al. 2020).

Social benefits for the farmers:

The proposed research bridges the gap between farmers/ranchers and recent AI technology. The outcome of this research will enable more efficient and optimal management of dairy cattle and barns. Farmers' quality of life will be enhanced through the increased profitability of dairy production. Healthy and productive dairy farming operations contribute to the social fabric of rural communities. The sustainability of these farms helps maintain jobs and supports the local economy, which benefits not only the farmers but also the broader community. Implementing innovative technologies like the cattle monitoring system can create opportunities for farmers to engage in learning and professional development as they continuously adapt to evolving practices and technologies. Further, early prediction of heat stress will promote the well-being and health of cattle, which will help address public concerns about the sustainability of food production practices, including the welfare of food-producing animals.

Learning outcomes:

Farmers/ranchers will learn how AI can be leveraged for early prediction of heat stress in dairy cattle. They will learn which changes in cattle behavior can be early indicators of heat stress and how AI-based automation of behavior analysis can be used to predict heat stress. This will equip them to make data-driven decisions that maximize profitability with minimal resources. The project will raise awareness among farmers and ranchers of how to reduce energy and water consumption through precision livestock management and efficient cooling mechanisms. They will learn how they can play a central role in reducing the carbon footprint of a barn, leading to sustainable livestock practices.

Researchers can use the dataset collected and labeled as a part of this project to advance their research in the domains of heat stress and mitigation, animal welfare, and sustainable agriculture using AI.

Action outcomes:

Farmers/ranchers will use the outputs of the project to mitigate financial losses from heat stress in cattle through efficient cooling techniques, to continuously monitor cattle activity, and to improve animal welfare. Beyond this direct utility for farmers/ranchers, the outputs of the project can also be used by engineers and researchers to design energy-efficient, optimal, and cost-effective cooling mechanisms to mitigate heat stress in dairy cattle. Eventually, the outputs of this project will also encourage farmers to move toward more energy-efficient and precise heat stress abatement techniques, contributing to more sustainable livestock practices.

Moreover, the system aids in the broader context of sustainability in dairy farming. By reducing the impact of heat stress, the system enables more consistent and optimal herd performance, which translates into higher productivity. This, in turn, contributes to the overall sustainability of the dairy industry by ensuring efficient resource utilization and maximizing economic returns for farmers. Further, our system exemplifies the potential of cutting-edge technology to advance modern agriculture.

- Enhanced Understanding of Technology in Agriculture: The project served as an immersive learning experience, expanding our understanding of how modern technology, particularly computer vision and machine learning, can be effectively leveraged to advance sustainable agricultural practices. We gained insights into the intricacies of developing a computer vision system tailored for livestock monitoring, highlighting the immense potential of technology to optimize farm operations, enhance animal welfare, and reduce environmental impact.

- Environmental Awareness: Our involvement in the project raised our awareness of the environmental implications of agricultural activities. We developed a deeper appreciation for the pivotal role that sustainable agricultural practices play in minimizing the environmental footprint, conserving natural resources, and mitigating waste generation.

- Skill Advancement: We underwent a significant skill development journey. We acquired expertise in computer vision, machine learning, and data analysis, which are instrumental in creating efficient and sustainable solutions for agricultural challenges. The hands-on experience gained through the project cultivated a deep practical understanding of how technology can be harnessed to optimize agricultural processes. These newly acquired skills equipped us to contribute to the broader agricultural technology landscape.

- Sensitization to Animal Welfare: Our work on livestock monitoring heightened our sensitivity to animal welfare issues in agriculture. We became aware of the role that technology plays in improving the lives of farm animals.

- Attitude Toward Innovation and Collaboration: The project fostered a more proactive and innovative mindset. We developed a keen interest in exploring cutting-edge solutions to real-world problems. Because sustainable agriculture often demands collaboration with experts from diverse fields, we also became eager to engage in interdisciplinary collaboration, fostering a broader network of experts and insights.

In summary, the knowledge gained throughout the project was not limited to technical aspects alone. It encompassed a holistic understanding of the vital relationship between technology, sustainability, and animal welfare in the realm of agriculture. The project's impact extended beyond the research domain, instilling an enduring appreciation for the complexities and opportunities present in the pursuit of sustainable agricultural practices.

Information Products

- Mitigating Heat Stress in Dairy Cattle using a Physiological Sensing-Behavior Analysis-Microclimate Control Loop (Article/Newsletter/Blog)

- AI for Agriculture (Mobile/Desktop Application)