Final report for GNE22-285

Project Information

Green fruit thinning is the process of removing young fruit from trees to increase the size of remaining fruit. Previous applications of robotic technologies in agriculture imply the feasibility of an automated green fruit thinning system, which could mitigate drawbacks present in more traditional thinning methods, e.g., non-selective thinning, significant labor requirements, etc. In this project, a robotic green fruit thinning system was developed and tested. The project consisted of three main objectives: 1) to develop a stem-cutting end-effector prototype; 2) to develop a 3D vision system for detecting coordinates of target fruit and boundaries in point-cloud form in an orchard environment; and 3) to integrate the components developed in objectives 1 and 2, along with those developed prior to the project start date, to create a robotic green fruit thinning system.

Overall, the project was successful. Green fruit removal dynamics experiments showed stem cutting to be a less damaging method for green fruit removal than pulling. The stem-cutting end-effector removed 90% or more of fruit on the first attempt in experiments. Mask R-CNN instance segmentation achieved an average precision (AP) of 83.4% for all green fruit masks, and 91.3% for green fruit masks larger than 32² pixels. PCA-based orientation estimation on 2D masks estimated over 75% of 2D orientations within 30 degrees of ground truth. Fruit-stem pairing achieved an AP of 81.4% on all masks and 90.6% on masks with assigned orientation labels, and the LSTM-based clustering obtained a clustering success rate of 68.4%. Preliminary neural network-based 3D orientation estimation estimated yaw within 30 degrees of ground truth 84.2% of the time, and pitch within 30 degrees of ground truth 93.4% of the time. Finally, by integrating the machine vision system, the stem-cutting end-effector, and a UR5e robotic arm, a robotic green fruit thinning system was developed and tested in the orchard, where it removed 87.5% of all target fruit. The results obtained during this project show the technical viability of robotic green fruit thinning, and future work will ideally lead to the eventual development of a commercial robotic green fruit thinning system for use in apple orchards. Such a system would make apple production more profitable for growers by reducing thinning labor costs and the need for chemical thinning.

The primary goal of this project is the development of a robotic system for green fruit thinning. The following are the objectives for the project:

Objective #1: Green Fruit Removal End-Effector Development

An end-effector for green fruit thinning will be developed. Based on the results of prior green fruit removal dynamics experiments conducted by the project team members, a stem-cutting mechanism will be used for simplicity and effectiveness of design. The end-effector design will be based on garden snippers. The end-effector will be designed such that no unintended damage is caused to trees and their structures. The performance of the end-effector will then be evaluated in orchard tests. The end-effector performance will be compared to that of a previous design developed by the project team members. It is expected that the end-effector will thin green fruit with a high success rate, while causing no damage to the tree or unintended removal of non-target fruit.

Objective #2: 3D Vision for Robotic Green Fruit Thinning

A 3D vision system will be developed for this project. The primary purpose of the 3D vision system will be to determine the 3D coordinates of target green fruit and a boundary map for collision-free path planning. The 3D vision system will build on results obtained from deep learning-based computer vision algorithms implemented for robotic green fruit thinning by the project team members. Data obtained during Spring 2022, including RGB-D (color-depth) images, will be used to aid in developing and implementing algorithms for 3D vision. A 3D vision system that can determine boundary points and fruit locations with high accuracy is expected.

Objective #3: Robotic Green Fruit Thinning System

A completed green fruit thinning system will be developed. The system will utilize the components developed for objectives #1 and #2 as well as components developed before the proposed project period. The end-effector will be mounted onto the robotic manipulator present in the lab facilities used for this project. The vision system will be used, as described in Objective #2, to determine target fruit locations and boundaries for the robotic manipulator to navigate. Path planning algorithms developed before the project start period will be used to determine the shortest path between multiple fruit. The performance of the system will be evaluated in orchard tests. A system that thins green fruit from a tree with high accuracy and success with no collisions with obstacles is expected.

Green fruit thinning is the process in which the number of fruits is reduced early in the growing season. It is the final opportunity to set the final fruit amount and placement on a tree limb (Lewis, 2018). The three main purposes of thinning, in order of importance, are the following: 1) to improve the size and quality of the remaining fruits; 2) to reduce the risk of biennial bearing, i.e., the overproduction of a crop one year, and the underproduction of the crop the next year; and 3) to reduce the occurrence of limb breakage resulting from leaving too much fruit on it. Also, the thinning of fruit reduces the risk of the spread of pests and diseases (Vanheems, 2015).

Several methods for green fruit thinning have been employed, including manual (i.e., by hand), chemical, and mechanical thinning. Hand thinning allows for thinning to be highly selective, i.e., one can choose specifically which fruits on a given branch are removed. However, this process is very time-consuming, and the high labor requirements make hand thinning infeasible at large scale. In chemical thinning, chemical thinner is applied to the trees at a fixed rate within an orchard. This method of thinning is an established and essential practice performed by fruit growers each year. For many apple growers, chemical thinning is becoming the single most important spray practice of the season. However, one main problem with chemical thinning is the inconsistency of response due to environmental and tree factors. The correct timing of application and choice of thinner formulation are vital factors in optimal chemical thinning, and both depend on fruit variety and growth stage (Tyagi et al., 2017). Mechanical thinning has shown some success in fruit thinning, with an example being a drum shaker, discussed by Miller et al. (2011), which thinned 37% of apples at the green fruit stage and reduced follow-up hand-thinning costs significantly. However, similarly to chemical thinning, mechanical thinning has been shown to be non-selective, and it can cause damage to the trees.

There are pros and cons to each of the previously discussed thinning methods. It is desired to develop a robotic system that is as selective as hand thinning, while being cost-effective like mechanical and chemical methods. This research investigated green fruit-thinning dynamics and the development of a green fruit-thinning system, specifically for apples. The project consisted of the following three research objectives: 1) the development of a stem-cutting end-effector; 2) the development of a 3D vision system to detect target green fruit in an orchard environment, as well as generate a boundary map for collision-free path planning; and 3) the integration of the robotic green fruit thinning system by combining the 3D vision system and stem-cutting end-effector with a robotic manipulator.

Cooperators

Research

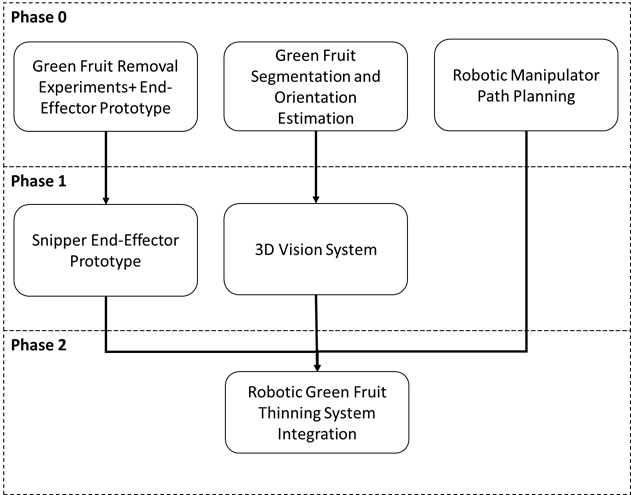

The project consisted of three primary phases. The first phase was a pre-project phase, named Phase 0, which included all work done for the development of a robotic green fruit thinning system before the proposed project start date. This included initial green fruit removal dynamics experiments and prototype evaluation, implementation of green fruit segmentation and orientation estimation algorithms, and robotic manipulator path planning for green fruit thinning. The next phase, named Phase 1, included the development of the stem-cutting end-effector and 3D vision system described in objectives #1 and #2, respectively. These objectives were completed in parallel. Lastly, Phase 2 integrated the components developed in Phase 0 and Phase 1 to develop a robotic system for green fruit thinning. A flowchart of the project is shown in Figure 1.

Figure 1. Methodology flowchart for the proposed robotic fruit thinning system

Objective #1: Green Fruit Removal End-Effector Development

1.1 Measuring of Green Fruit Removal Dynamics

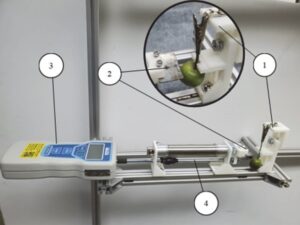

The force required to remove green fruit from a branch is a critical parameter for designing an effective robotic green fruit thinning end-effector. It is important to select a proper actuator that can provide sufficient force to remove targeted fruits. For this study, the dynamics of pulling and stem-cutting were assessed (Figure 2). Experiments were conducted to test the two methods in May-June 2021 at the Fruit Research and Extension Center in Biglerville, PA. Three different apple cultivars were used for the experiments: 1) Fuji, 2) Golden Delicious, and 3) GoldRush.

Figure 2: Test setup for green fruit removal force measurement: a) pulling force measurement; b) stem-cutting force measurement (1) stem-holding mount; 2) stem-cutting blade; 3) digital force gauge; 4) force-transfer rod)

1.2 Green Fruit Removal End-Effector Development

A stem-cutting end-effector (figure 3) was developed based on the results of previous fruit-removal dynamics experiments conducted by the project team members. The end-effector design incorporated a PVC pipe with a DC motor attached to a side, and a utility blade attached to the shaft of the motor. For a given target fruit, the PVC pipe would encapsulate the fruit, and the motor would be activated to cut the stem of the fruit against a 3D-printed cutting mount. This prototype was found to have a high fruit-removal success rate. However, it was somewhat difficult to maneuver in an orchard environment when attached to a robotic manipulator. Neighboring fruit and canopy would need to be moved in some cases to allow the target fruit to be properly encapsulated. A snipper-based end-effector is believed to be easier to maneuver, as such an end-effector could move directly to the stem of the target fruit without having to encapsulate the fruit itself first.

Figure 3: Stem-cutting end-effector prototype developed prior to the proposed project period.

The end-effector prototype was tested and evaluated at the Penn State Fruit Research and Extension Center in Biglerville, PA. The prototype was evaluated both with a handheld bar and mounted onto a UR5e robotic manipulator (Figure 4). Fuji and GoldRush trees located at the center were used for testing; 50 fruit for each cultivar were tested. The prototype was evaluated on its success rate in fruit removal, i.e., the ratio of successful fruit removal attempts to total fruit removal attempts. Its ability to maneuver around obstacles in the orchard environment while attached to a robotic manipulator was assessed qualitatively.

Figure 4: Field tests of the green fruit thinning end-effector, left) handheld type, and right) attached to a UR5e robotic manipulator

The testing procedure for each test fruit was as follows: 1) record the fruit diameter and length and the stem diameter and length; 2) place the snippers of the end-effector around the stem of the target fruit; 3) record the angle between the snippers and the stem; 4) actuate the end-effector motor and record whether or not fruit removal was successful. After testing and data recording, the success rate of fruit removal, as described previously, was obtained. One-way ANOVA tests were applied to examine relationships between fruit/stem dimensions, cutting angle, and the success of fruit removal.
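The analysis step above can be sketched in a few lines. The following is a minimal illustration, not the project's analysis script: the function names and the stem-length values are hypothetical, and the grouping (stem length by removal outcome) is only one example of a comparison that a one-way ANOVA could be applied to.

```python
from statistics import mean


def success_rate(outcomes):
    """Percentage of successful removal attempts (True = fruit removed)."""
    return 100.0 * sum(outcomes) / len(outcomes)


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical stem lengths (mm), grouped by removal outcome.
stem_len_success = [14.0, 15.5, 13.2, 16.1, 14.8]
stem_len_failure = [9.5, 10.2, 11.0]
f_stat, df_b, df_w = one_way_anova_f([stem_len_success, stem_len_failure])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

The F statistic would then be compared against the F distribution with the reported degrees of freedom (typically through a statistics package) to obtain a p-value.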

Objective #2: 3D Vision for Robotic Green Fruit Thinning

A machine vision system was developed to detect the 3D coordinates of green fruit, as well as determine boundary coordinates in the form of a point cloud. Data were obtained at the green fruit stage in 2022 and 2023 for processing and algorithm development. The Intel® RealSense™ Depth Camera D435i was used to obtain RGB-D (color-depth) images, where depth images contain pixels whose values indicate the distances of the corresponding points in the environment from the camera. The camera was mounted to the UR5e robotic manipulator, and the UR5e robot was attached to a utility vehicle (UV). In total, 15 Fuji, 10 Golden Delicious, and 10 GoldRush trees located at the Penn State Fruit Research and Extension Center were used for image acquisition. The camera took images from a range of 20-100 cm. The fruit sizes were between 15 and 30 mm. For each tree, the UV drove up to align the mounted robotic manipulator with the tree. The robotic manipulator was manually positioned to take images of each suitable cluster within the tree. At least three images of each cluster were taken. In total, about 1000-1500 RGB-D images were taken.
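To illustrate how a depth image can yield 3D fruit coordinates, the sketch below back-projects pixels through a pinhole camera model. It is a simplified example under stated assumptions: the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical parameters, lens distortion is ignored, a depth of 0 is treated as an invalid reading, and the function names are not taken from the project code or from any camera SDK.

```python
from statistics import median


def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres to camera-frame (x, y, z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def fruit_position_3d(mask_pixels, depth_m, fx, fy, cx, cy):
    """Estimate a fruit's 3D position from its mask pixels and a depth image.

    mask_pixels: list of (u, v) pixel coordinates belonging to the fruit mask.
    depth_m: 2D list indexed as depth_m[v][u], in metres (0 = no reading, assumed).
    """
    depths = [depth_m[v][u] for (u, v) in mask_pixels if depth_m[v][u] > 0]
    if not depths:
        return None  # no valid depth inside the mask
    z = median(depths)  # median is robust to a few background pixels
    u_c = sum(u for u, _ in mask_pixels) / len(mask_pixels)
    v_c = sum(v for _, v in mask_pixels) / len(mask_pixels)
    return deproject_pixel(u_c, v_c, z, fx, fy, cx, cy)
```

Applying `deproject_pixel` to every valid pixel rather than only the mask centroid would give a point cloud of the kind used for the boundary map.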

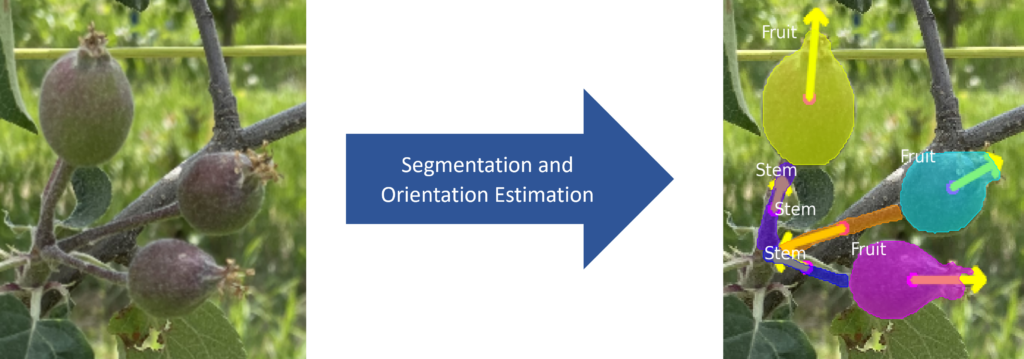

2.1 Green Fruit Detection and Orientation

The color images were used for green fruit and stem segmentation and orientation estimation, where segmentation is the generation of pixel-wise masks of a target object. The project team members implemented algorithms for green fruit and stem segmentation and orientation estimation prior to the project start date; an example of their output is shown in Figure 5.

Figure 5. Green fruit segmentation and orientation estimation.

2.2 Green Fruit-Stem Pairing and Clustering

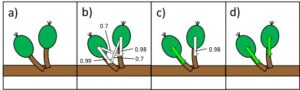

A shallow neural network was used as the basis for the fruit and stem pairing algorithm. An illustration of this algorithm is shown in Figure 6. Pseudocode for this algorithm can be found in the Appendix. First, all possible fruit and stem pairs are put in a list. The list is then iterated through, and the corresponding features for each possible fruit and stem pair are processed by the pairing neural network to generate pairing scores. The highest-scoring possible pair is declared a pair if its score lies above the designated threshold value, and all other possible pairs involving that fruit or that stem are removed from consideration. This process is repeated for the remaining possible pairs until none remain with scores above the threshold value.

Figure 6: Illustration of the fruit and stem pairing algorithm. a) an example image containing two fruits and two stems. b) the neural network generates scores for each possible fruit and stem pair. c) the highest-scoring pair is declared to be a pair, and all other possible pairs associated with the paired fruit and stem are eliminated from future pairing consideration. d) the next highest-scoring pair is declared as a pair in a similar manner as the previous declared pair.
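The greedy pairing procedure described above can be sketched as follows. This is an illustration only: `score_fn` stands in for the pairing neural network, the distance-based `toy_score` and the threshold value are hypothetical, and fruits and stems are reduced to 2D points for brevity.

```python
import math


def pair_fruits_and_stems(fruits, stems, score_fn, threshold):
    """Greedily pair fruits with stems, highest score first.

    Returns a list of (fruit_index, stem_index) tuples. Each fruit and each
    stem appears in at most one pair; pairs must score above the threshold.
    """
    candidates = [
        (score_fn(f, s), i, j)
        for i, f in enumerate(fruits)
        for j, s in enumerate(stems)
    ]
    candidates.sort(key=lambda c: c[0], reverse=True)

    paired_fruits, paired_stems, pairs = set(), set(), []
    for score, i, j in candidates:
        if score <= threshold:
            break  # every remaining candidate scores at or below the threshold
        if i in paired_fruits or j in paired_stems:
            continue  # eliminated by an earlier, higher-scoring pair
        pairs.append((i, j))
        paired_fruits.add(i)
        paired_stems.add(j)
    return pairs


def toy_score(fruit_xy, stem_xy):
    """Hypothetical stand-in for the pairing network: closer means higher."""
    return 1.0 / (1.0 + math.dist(fruit_xy, stem_xy))


fruits = [(0.0, 0.0), (10.0, 0.0)]
stems = [(0.0, 1.0), (10.0, 1.0)]
print(pair_fruits_and_stems(fruits, stems, toy_score, threshold=0.3))
```

Sorting once and skipping already-paired items is equivalent to repeatedly selecting the highest-scoring remaining pair, because pair scores do not change as pairs are declared.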

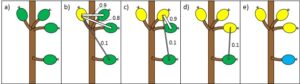

An LSTM-based clustering algorithm was used for green fruit clustering. The procedure is as follows. First, an initial green fruit is chosen randomly, and the neural network is applied to that fruit to generate a set of output states. Then, the network is applied to every other fruit using the stored states from the first fruit, to obtain scores for all possible two-fruit combinations containing the first fruit. The two-fruit combination with the highest score above a clustering threshold is declared a cluster. If no combination scores above the threshold, the initial fruit is declared a cluster of one fruit, and the algorithm moves on to the next fruit. If a two-fruit cluster is found, each remaining unclustered fruit is processed independently, and the three-fruit combination with the highest score above the clustering threshold is declared a cluster. This process repeats for the given cluster until no remaining fruit scores above the threshold or the cluster reaches the upper limit of six fruit. The algorithm runs until no unclustered fruit remain in the image. An illustration of this algorithm is shown in Figure 7.

Figure 7. Illustration of the LSTM-based fruit clustering algorithm, with a threshold value of 0.5. a) The example image contains two clusters. b) One fruit is chosen first for clustering, and the algorithm generates a score for each other fruit relative to the target fruit. c) The highest-scoring fruit (above 0.5) is added to the target fruit to form a cluster, and the process repeats. d) The third fruit is added to the cluster, and the process repeats for the last remaining fruit. e) Because its score lies below the threshold value, the last fruit is not added to the cluster. Since no other fruit remain, the last fruit is set as its own cluster.
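The control flow of the clustering procedure can be sketched independently of the network itself. In the example below, `score_fn` is a placeholder for the LSTM-based scorer and `proximity_score` is a hypothetical distance-based substitute, so the sketch shows only the greedy growth of clusters, not the learned model. For reproducibility it also starts each cluster from the first unclustered fruit rather than a random one.

```python
import math


def cluster_fruits(fruits, score_fn, threshold=0.5, max_size=6):
    """Greedily grow clusters one fruit at a time.

    score_fn(cluster_members, candidate) returns the score for adding the
    candidate to the current cluster. Returns a list of index lists.
    """
    unclustered = list(range(len(fruits)))
    clusters = []
    while unclustered:
        cluster = [unclustered.pop(0)]  # seed fruit (first, not random, here)
        while unclustered and len(cluster) < max_size:
            members = [fruits[i] for i in cluster]
            scores = {j: score_fn(members, fruits[j]) for j in unclustered}
            best = max(unclustered, key=lambda j: scores[j])
            if scores[best] <= threshold:
                break  # no remaining fruit belongs to this cluster
            cluster.append(best)
            unclustered.remove(best)
        clusters.append(cluster)
    return clusters


def proximity_score(members, candidate, scale=20.0):
    """Hypothetical scorer: above 0.5 when the nearest member is within `scale`."""
    nearest = min(math.dist(m, candidate) for m in members)
    return 1.0 / (1.0 + nearest / scale)


fruits = [(0, 0), (10, 0), (15, 5), (100, 100)]
print(cluster_fruits(fruits, proximity_score))
```

With these toy coordinates the first three fruit form one cluster and the distant fourth fruit becomes a cluster of one, mirroring the sequence illustrated in Figure 7.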

2.3 3D Green Fruit Orientation Estimation

Once green fruit segmentation was completed, the 3D orientation of each fruit was estimated. There are two parameters that need to be estimated to sufficiently quantify the orientation of green fruit for thinning purposes: yaw (which way in the image plane the fruit is facing) and pitch (how far off the image plane the fruit is facing). In this study, this was accomplished using a deep neural network-based structure shown in Figure 8. A green fruit mask, generated by the Mask R-CNN, was taken as input. The range of pitch values is between -90 degrees and 90 degrees, with 90 degrees facing directly towards the camera, and -90 degrees facing directly away. The 3D orientation estimation network was trained using the training and validation dataset for a total of 200 iterations. The training dataset had data augmentation applied to increase its size by a factor of four, where each image had 90 degree, 180 degree, and 270 degree rotations.
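The rotation-based augmentation can be illustrated with a short sketch. The mask representation (a nested list), the function names, and the label handling are assumptions: here yaw is taken to be measured in image coordinates (x right, y down), so a 90 degree clockwise image rotation adds 90 degrees to yaw, while pitch is left unchanged. The report does not state how labels were updated, so this convention is illustrative only.

```python
def rotate_cw(mask):
    """Rotate a 2D list by 90 degrees clockwise."""
    return [list(row) for row in zip(*mask[::-1])]


def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def augment_with_rotations(mask, yaw_deg, pitch_deg):
    """Return the original sample plus its 90, 180, and 270 degree rotations."""
    samples = [(mask, wrap_deg(yaw_deg), pitch_deg)]
    rotated = mask
    for k in (1, 2, 3):
        rotated = rotate_cw(rotated)
        # Assumed convention: yaw follows the image rotation, pitch does not change.
        samples.append((rotated, wrap_deg(yaw_deg + 90.0 * k), pitch_deg))
    return samples


mask = [[1, 2],
        [3, 4]]
for rotated_mask, yaw, pitch in augment_with_rotations(mask, 0.0, 30.0):
    print(rotated_mask, yaw, pitch)
```

Each input sample yields four training samples, which matches the factor-of-four increase in dataset size described above.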

Figure 8. Deep neural network-based structure used for 3D orientation estimation. The neural network was trained on the same dataset used for training the Mask R-CNN algorithm.

Objective #3: Robotic Green Fruit Thinning System

The end-effector and 3D vision system algorithms were integrated with a UR5e robotic manipulator to obtain a robotic green fruit thinning system (Figure 9). A series of tests was conducted to evaluate the integrated robotic fruit thinning system. Field evaluation was carried out in research apple orchards at Penn State FREC in May-June 2023. The Fuji and Golden Delicious cultivars were tested in these orchards. The major indicators used to evaluate the overall performance of the thinning system were its success in reaching and removing targeted fruits and its effects on other fruits and the canopy. Before the test, the total number of fruits and clusters in targeted trees, and the number of thinned fruits and corresponding locations were recorded.

Figure 9. Integrated Robotic Green Fruit Thinning System

Results and Discussion for Objective 1

The results for the stem-cutting end-effector prototype experiments are shown in Table 1. Across all tests, with both the handheld prototype and the robotic arm, success rates of 90% or higher were achieved with a single cutting attempt for all cultivars. In most cases, the end-effector could engage fruit without interference from obstacles, while for some fruits, leaves or shoots needed to be pushed aside. The GoldRush cultivar had a lower success rate than the other two cultivars, possibly due to the shorter stems of these apples. The chances of cutting leaves or spurs were higher if the fruit stem was shorter. For densely packed clusters, the targeted fruit could only be engaged within the PVC pipe when other fruit in the cluster were pushed away or the end-effector was offset. The major cause of failures was obstacles such as leaves or spurs becoming stuck in the cutting pathway. A more powerful cutting mechanism may help improve the success rate, although it is not ideal to damage leaves or spurs during green fruit removal. Therefore, more effort should be put into improving the mechanism so that it can move these leaves or shoots aside when engaging with the target fruits.

Table 1. The performance of the developed stem-cutting end-effector in the field tests

| Test | Cultivar | Total no. of fruit | Fruit removed | Success rate |
|---|---|---|---|---|
| Handheld prototype | Fuji | 50 | 47 | 94% |
| Handheld prototype | Golden Delicious | 50 | 48 | 96% |
| Handheld prototype | GoldRush | 50 | 45 | 90% |
| Robotic arm prototype | Golden Delicious | 25 | 23 | 96% |

Results and Discussion for Objective 2

1) Green Fruit Detection and Orientation

Table 2 shows the average precision (AP) of the green fruit and stem segmentation. Overall, the AP for green fruit segmentation is better than that for stems. Green fruit segmentation results on all test dataset masks are reasonably high, at an AP of 83.4%. Stem segmentation results on all masks, however, are notably lower at 38.9%. In general, segmentation performance for each mask type is greatest for the largest mask sizes (>32² pixels), with green fruit and stem segmentation reaching 91.3% and 67.7%, respectively. The increase in performance compared to using all masks is particularly notable for stems.

Table 2. The average precision of green fruit and stem segmentation

| Object | AP (%), all masks | AP (%), >32² px | AP (%), 27²-32² px | AP (%), 20²-27² px | AP (%), <20² px |
|---|---|---|---|---|---|
| Green fruit | 83.4 | 91.3 | 80.0 | 85.4 | 24.2 |
| Stem | 38.9 | 67.7 | 64.5 | 57.0 | 30.5 |

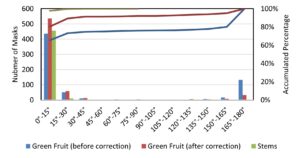

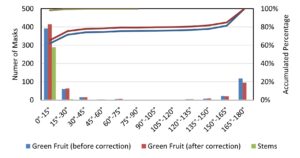

To evaluate the performance of the fruit orientation estimation with the segmented fruit masks by the developed Mask R-CNN algorithm, the fruit orientation estimation was conducted with both segmented fruit masks and the ground truth masks (manually labelled). The fruit orientation estimation results were calculated using the PCA method under the two conditions before and after correction was applied. Histograms were used to illustrate the instances of different angular error levels and the accumulated percentage of these angular errors as shown in Figure 10 and Figure 11. Overall, orientation estimation using the PCA method presented good performance with the majority of orientations being estimated within 15° of ground truth orientations.

Figure 10: Histogram of orientation estimation errors with all the ground truth (GT) fruit masks. Bars are the number of masks, and the lines are the accumulated percentages.

Figure 11: Histogram of orientation estimation errors with all the Mask R-CNN algorithm segmented masks. Bars are the number of masks, and the lines are the accumulated percentages.
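For readers unfamiliar with PCA-based orientation estimation, the sketch below computes the principal axis of a 2D mask from the covariance of its pixel coordinates. It is a generic illustration, not the project's implementation: the function names are hypothetical, and because PCA yields an axis rather than a direction, the result is only defined up to 180 degrees. How the report's correction step resolves that ambiguity is not reproduced here.

```python
import math


def pca_axis_deg(pixels):
    """Angle of the principal axis of a set of (x, y) pixels, in degrees.

    The angle is measured from the x axis and lies in (-90, 90].
    """
    n = len(pixels)
    mx = sum(x for x, _ in pixels) / n
    my = sum(y for _, y in pixels) / n
    sxx = sum((x - mx) ** 2 for x, _ in pixels) / n
    syy = sum((y - my) ** 2 for _, y in pixels) / n
    sxy = sum((x - mx) * (y - my) for x, y in pixels) / n
    # Closed-form direction of the largest-variance eigenvector of the
    # 2x2 covariance matrix [[sxx, sxy], [sxy, syy]].
    return math.degrees(0.5 * math.atan2(2.0 * sxy, sxx - syy))


def axis_error_deg(est_deg, gt_deg):
    """Smallest angle between two undirected axes, in [0, 90]."""
    d = abs(est_deg - gt_deg) % 180.0
    return min(d, 180.0 - d)


diagonal_mask = [(0, 0), (1, 1), (2, 2), (3, 3)]
print(pca_axis_deg(diagonal_mask))
```

Error histograms like those in Figures 10 and 11 can be built by applying an error function of this kind to each estimated and ground-truth orientation pair, using a 360 degree wrap instead once the direction has been resolved.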

2) Green Fruit-Stem Pairing and Clustering

Results for green fruit and stem pairing are shown in Table 3. The overall average precision for the test dataset reached 81.4%, which indicates that fruits and stems were paired with reasonable precision and recall. The Fuji cultivar had the best fruit and stem pairing AP, with the GoldRush cultivar nearly as high, while the Golden Delicious cultivar had the lowest. This decline in performance could be attributed to differences in fruit position within a cluster between Golden Delicious and the other cultivars. For example, the images of Golden Delicious show green fruit within clusters in close proximity to each other, whereas for the other two cultivars, green fruit within clusters are more spaced out. The closer proximity of fruit for Golden Delicious could be leading to more false fruit-stem pairings.

Table 3. Results for green fruit and stem pairing with different cultivars

| Metric | Overall | GoldRush | Fuji | Golden Delicious |
|---|---|---|---|---|
| Accuracy | 96.3% | 96.8% | 95.4% | 96.8% |
| AP | 81.4% | 82.4% | 83.2% | 75.2% |

Clustering results for each algorithm and each cluster size are shown in Table 4. Consistent with overall clustering performance, the LSTM-based clustering algorithm performs notably better than the OPTICS algorithm for most cluster sizes. This is especially the case for cluster sizes 1, 4, and 5, where the LSTM-based algorithm outperforms the OPTICS implementations by 15 to 60 percentage points. However, for cluster size 2, the OPTICS implementations using the fruit endpoint (FE) and stem centroid (SC) perform marginally better than the LSTM-based algorithm. Also, for cluster size 6, both OPTICS implementations using the fruit and stem endpoints (FE and SE) show better performance than the LSTM-based algorithm. However, due to the small number of clusters that contain 6 fruits in the test dataset, this result cannot be claimed to be significant.

Table 4. Clustering performance for different clustering methods and different cluster sizes

| Cluster size | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| # clusters | 94 | 79 | 60 | 56 | 27 | 4 |
| LSTM-based | 67.00% | 64.60% | 75.00% | 62.50% | 85.20% | 50.00% |
| OPTICS-FC | 39.40% | 55.70% | 58.30% | 32.10% | 48.10% | 50.00% |
| OPTICS-FE | 29.80% | 67.10% | 61.70% | 46.40% | 59.30% | 75.00% |
| OPTICS-SC | 26.60% | 65.80% | 70.00% | 35.70% | 55.60% | 25.00% |
| OPTICS-SE | 22.30% | 63.30% | 70.00% | 23.20% | 44.40% | 75.00% |

3) 3D Green Fruit Orientation Estimation

The results for 3D orientation estimation on the test dataset are shown in Table 5. The share of fruit with pitch estimated within 30 degrees of ground truth is 9.2 percentage points higher than the corresponding share for yaw. This effect is more pronounced at the 45 degree threshold, where the difference is 10.8 percentage points. In general, the percentages of pitch estimations within threshold lie in the 90% range, while those of yaw estimations lie in the 80% range. For yaw estimations, 8.7% were found to have errors greater than 90 degrees, which indicates that some estimates were being “flipped”, i.e., some fruits had their yaws estimated in the opposite direction of their ground truth yaws. Overall, the 3D orientation estimation algorithm, based on its results, especially those for pitch, was deemed suitable for application in the robotic green fruit thinning system.

Table 5. Results for 3D orientation estimation applied to the test dataset.

| Metric | Pitch angle | Yaw angle |
|---|---|---|
| Errors within 30 degrees (%) | 93.4 | 84.2 |
| Errors within 45 degrees (%) | 98.4 | 87.6 |
| Errors greater than 90 degrees (%) | N/A | 8.7 |
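Metrics of the kind reported in Table 5 can be computed as sketched below. This is an assumed formulation rather than the project's evaluation code: yaw errors are wrapped so that the error never exceeds 180 degrees, and the sample angles are made up for illustration.

```python
def angular_error_deg(est_deg, gt_deg):
    """Absolute angular difference with wrap-around, in [0, 180]."""
    d = abs(est_deg - gt_deg) % 360.0
    return min(d, 360.0 - d)


def percent_within(errors, threshold_deg):
    """Percentage of errors at or below the threshold."""
    return 100.0 * sum(e <= threshold_deg for e in errors) / len(errors)


def percent_flipped(errors, flip_threshold_deg=90.0):
    """Percentage of errors above the threshold (estimate points the wrong way)."""
    return 100.0 * sum(e > flip_threshold_deg for e in errors) / len(errors)


# Hypothetical (estimated, ground truth) yaw pairs in degrees.
yaw_pairs = [(170.0, -170.0), (10.0, 25.0), (90.0, 40.0), (0.0, 175.0)]
yaw_errors = [angular_error_deg(est, gt) for est, gt in yaw_pairs]
print(yaw_errors)
print(percent_within(yaw_errors, 30.0), percent_flipped(yaw_errors))
```

The wrap-around matters for yaw: an estimate of 170 degrees against a ground truth of -170 degrees is a 20 degree error, not 340 degrees.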

Results and Discussion for Objective 3

Fifty-seven total trials were conducted. Seventeen were conducted without orientation correction being applied, and 40 were conducted with orientation correction being applied. Results for these trials are shown in Table 6. Before orientation correction, the robotic system was able to reach approximately three-fourths of all target fruit, while being able to encapsulate and remove approximately two-thirds of all target fruit. Orientation correction improved results for both metrics considerably, with an increase of approximately 20 percentage points for each. Both before and after correction, the encapsulation/removal rate was lower than the reach rate, by 11.8 percentage points before correction and 7.5 percentage points after. Overall, after orientation correction was applied, the obtained results are deemed to be good for an initial robotic green fruit thinning prototype.

Table 6. Results from robotic green fruit thinning system experiments

| Condition | Reach rate (%) | Encapsulation/removal rate (%) |
|---|---|---|
| Before correction | 76.5 | 64.7 |
| After correction | 95 | 87.5 |

The following were the key conclusions from this project:

- Compared to pulling, the stem cutting method can avoid damage to a cluster. The developed stem-cutting end-effector removed 90% or more of fruit on the first attempt in each experiment.

- Mask R-CNN for instance segmentation achieved an AP of 83.4% for all green fruit masks, and 91.3% for green fruit masks larger than 32² pixels. Also, 2D PCA-based orientation estimation estimated over 75% of 2D orientations within 30 degrees of ground truth.

- Fruit-stem pairing achieved an average precision of 81.4% on all masks and 90.6% on masks with assigned orientation labels, and the LSTM-based clustering obtained a clustering success rate of 68.4%.

- Preliminary neural network-based 3D orientation estimation estimated yaw within 30 degrees of ground truth 84.2% of the time, and pitch within 30 degrees of ground truth 93.4% of the time.

- By integrating the machine vision system, the stem-cutting end-effector and a UR5e robotic arm, a robotic green fruit thinning system was developed and tested in the orchard, which was able to remove 87.5% of all target fruit.

Overall, the results obtained during this project show the technical viability of a robotic green fruit thinning system. Furthermore, this work serves as a foundation for future research in robotic green fruit thinning. Ideally, subsequent work will lead to the eventual development of a commercial robotic green fruit thinning system for use in apple orchards. Such a system would help apple growers financially by reducing thinning labor costs and the need for chemical thinning, ultimately making apple production more profitable.

Education & Outreach Activities and Participation Summary

Participation Summary:

Throughout the project period, some outreach has been done through the Robotic Green Fruit Thinning Project.

1. Journal publications

Hussain, M., He, L., Schupp, J., Lyons, D. and Heinemann, P., 2023. Green fruit segmentation and orientation estimation for robotic green fruit thinning of apples. Computers and Electronics in Agriculture, 207, p.107734.

Hussain, M., He, L., Schupp, J., Lyons, D. and Heinemann, P., 2024. Green fruit-stem pairing and clustering for machine vision system in robotic thinning of apples. (Ready to submit to Journal of Field Robotics)

2. Webinars, talks and presentations

- Magni Hussain (Presenter), Long He. Green fruit removal dynamics for development of robotic green fruit thinning end-effector. 2023 ASABE Annual International Meeting. 7/9-12/2023. Omaha, NE.

- Magni Hussain (Presenter), Long He, Paul Heinemann. 3D orientation estimation of green fruit for robotic thinning. 2023 NABEC Meeting. 7/31/2023. Guelph, Canada.

- Magni Hussain (Presenter), Long He, Paul Heinemann, and James Schupp. Development of robotic green fruit thinning system for apple orchards. Poster presentation at the 2023 Mid-Atlantic Fruit and Vegetable Grower Convention. 1/31/2023. Hershey, PA. (~100 growers attended)

- Magni Hussain. Robotic Green Fruit Thinning for Apple Production. 2024 Mid-Atlantic Fruit and Vegetable Grower Convention. 2/1/2024. Hershey, PA. (~100 growers attended)

3. Workshop and Field Days

- On September 14, 2022, a brief presentation and demonstration of current progress on the robotic green fruit thinning system was done for about 30 farmers at the Plant Protection Field Day at the Fruit Research and Extension Center in Biglerville, PA.

- On June 6, 2023, an oral presentation and demonstration of current progress on the robotic green fruit thinning system was done for about 25 farmers and other stakeholders at the 2023 Precision Technology Field Day at the Fruit Research and Extension Center in Biglerville, PA.

4. Others

- Mechanical demonstrations of the robotic green fruit thinning system were done at Penn State Ag Progress Days 2022 from August 9-11. Although an exact number of how many farmers/ranchers, agricultural educators, or service providers attended cannot be provided, the number of general attendees interacted with during the event is estimated to be in the hundreds. During the event, the project was featured in two TV interviews by Pennsylvania Cable Network (PCN) and WTAJ.

- The project was featured, including an interview, in WQED Pittsburgh's The Growing Field: Future Jobs in Agriculture in October 2022.

Project Outcomes

Overall, the results obtained during this project serve as foundational steps in research towards robotic green fruit thinning. While the work done in this project is a good early step towards green fruit thinning automation, more work is needed before commercial application in orchards is feasible. The prototype evaluated in this project was successful in dealing with target fruit in a local cluster. However, the ability of a system to thin green fruit throughout a tree canopy, and ultimately an orchard, with full autonomy is essential for financially feasible commercial implementation. There are several ways that this could be done, including the implementation of a multi-arm system that could thin several fruit simultaneously, similar to those currently in commercial trials for apple harvest. Such systems could be autonomously controlled to act as mobile robots. Furthermore, for larger commercial orchards, several of these systems could run simultaneously and coordinate with each other for efficient thinning. The adoption of apple tree training systems that train the trees into a more 2D structure will also be important for the implementation of robotic systems. Traditional tree structures are 3D in nature and would require more complex robotic manipulators to reach fruit reliably. 2D structures also help in the detection of green fruit, since occlusion by the tree structure is less likely.

The thinning prototype developed in this project could be integrated with a green fruit yield estimation system. Such a system would drive through an orchard and estimate the current number of fruitlets on each tree. This information can be used to determine the number of fruit to be thinned for each tree, even down to the fruit and cluster level. This setup would be more efficient than having the thinning prototype also estimate how much to thin each tree, especially since dedicated yield estimation systems can determine fruit count per tree quickly. If the yield estimation system provides thinning decisions at cluster resolution, the position of each cluster must be estimated precisely and accurately, so that the thinning system can reliably reach and identify the target cluster and thin it correctly.
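As a rough illustration of how per-tree fruitlet counts could be turned into thinning targets, the sketch below subtracts a desired crop load from each estimated count. This was not implemented in the project; the function name, the tree identifiers, and the target value are all hypothetical, and a real system would likely set targets per tree from horticultural criteria rather than use a single number.

```python
def fruit_to_remove(estimated_counts, target_per_tree):
    """Return how many fruitlets to thin from each tree.

    estimated_counts: dict mapping a tree ID to its estimated fruitlet count
                      (assumed output of a yield estimation system).
    target_per_tree:  desired number of fruit left on each tree
                      (hypothetical, uniform target for illustration).
    """
    # A tree already at or below the target needs no thinning.
    return {
        tree: max(0, count - target_per_tree)
        for tree, count in estimated_counts.items()
    }


# Example with made-up counts for two trees.
plan = fruit_to_remove({"tree_1": 120, "tree_2": 60}, target_per_tree=80)
```

The same subtraction could be applied per cluster instead of per tree if the yield estimation system reports counts at cluster resolution.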

This project taught us about many aspects of robotic solutions for crop load management at the green fruit stage, including the critical steps needed to develop a robotic system. For example, conducting fruit removal dynamics tests is very important for determining the mechanism structure and parameters. Meanwhile, a machine vision system with advanced algorithms is necessary to accurately detect and localize targeted green fruit in a tree canopy. We learned that tree canopy structure and density are major factors that can affect green fruit detection accuracy. The integrated robotic green fruit thinning system achieved the goal of removing targeted green fruit with a good success rate (87.5% of target fruit in the orchard test), but at its current stage the system still operates at very low efficiency. Overall, although our research team developed several core components for a robotic green fruit thinning system, significant progress is still required towards a more accurate and efficient robot for green fruit thinning. Future studies should consider improving machine vision systems for green fruit detection, pairing, and clustering, as well as increasing path planning efficiency in 3D canopy environments.