Final report for GNE22-293

Project Information

Crop load management is the single most important yet most difficult management strategy determining the annual profitability of apple orchards. Pollination and thinning are the two practices that most strongly affect crop load management. Environmental conditions interfere with the natural pollination process, causing large uncertainty in achieving optimal pollination. After pollination, applying the appropriate amount of thinning remains a challenge: if thinning is inadequate and too many fruits remain on the tree, fruit size will be small and fruit quality will be poor. Over-thinning also carries economic perils, since yield and crop value in the year of application will be reduced and fruit will be excessively large, with reduced quality due to reduced flesh firmness, reduced color, and a much-reduced post-harvest life. Thus, in this study, we propose to develop a robotic apple crop load management system to achieve high yield and quality of fruit crops, resulting in a substantial economic benefit to the tree fruit industry. The proposed robotic system consists of two major components: i) a machine vision system that can identify the location of apple flower clusters and king flowers, generate a flower density map, and communicate with the robotic manipulator automatically; and ii) a spraying system mounted on a robotic manipulator to reach the target position. It is expected that our prototype and field validation will provide sufficient information for companies to develop and commercialize a robotic crop load management system for growers.

The primary goal of this proposed project is to develop an autonomous apple crop load management system that is capable of localization of flower clusters and king blossom on apple canopies to perform precision targeted spraying. The project will be focused on the following objectives:

- Establish a flower cluster image dataset throughout the flowering growing stage

The purpose of this objective is to collect an adequate dataset of apple flower images for the later training and testing of the deep learning network. Images will be taken of Gala and Honeycrisp apple trees at different periods of the flowering stage. Two ZED 2 stereo cameras (Stereolabs) will be used to cover the whole tree canopy. The manually counted number of king blossoms will serve as the ground truth against which the results of the vision system are compared.

- Flower Cluster Localization and King Blossom Identification

In this objective, a deep learning-based machine vision system will be developed for individual apple flower segmentation. With some further image processing, separate flower clusters will be localized, and the king blossom will be identified within each flower cluster.

- Develop a path sequencing algorithm to support the decision making for chemical suspension

An optimized trajectory leads to higher efficiency for the robotic system. The most well-known problem of searching for the optimal sequence is the Traveling Salesman Problem (TSP). The goal of the TSP is to find a minimal-cost cyclic tour through a set of points such that every point is visited once.

- Integrate the vision system and robotic manipulator to perform lab evaluation on precision apple blossom thinning

A UR-5e robotic manipulator will be used to integrate general spraying nozzle along with the vision system developed in previous objective. The manipulator and sensors will be mounted at different locations and orientations in a reconfigurable frame to make it suitable to narrow tree canopy architectures.

Insect pollination has traditionally been an important ecosystem service for the productivity of apples. However, evidence suggests that supplies of pollination services, both from honeybees and wild pollinators, do not match the increasing demands (Ramirez et al., 2013). Due to Colony Collapse Disorder (CCD), honeybees around the world have been dying off at alarming rates. Environmental conditions, including heat, humidity, and wind, also have a direct effect on when and how insect pollinators are active. Even localized micro-climates can affect the work done by these insect pollinators. Under such conditions, farmers around the world constantly face a large number of risks and uncertainties when considering the pollination of their crops.

To minimize these risks, farmers have long searched for alternative methods to gain more control over the pollination of apples, thus increasing food security and often increasing crop yield. Artificial pollination, in which pollen is applied directly to the flowers, is among them. The proposed research is designed to deliver a robotic system to address this challenge by creating a strategy to accurately identify flowers during the blooming period, track flower growth, and finally apply pollen precisely when flower development is at the right stage.

One of the major challenges in robotic pollination is to develop a robust and accurate blossom detection algorithm that can detect king flowers against varying backgrounds and under varying illumination. Deep learning techniques, such as the Faster Region-Based Convolutional Neural Network (Faster R-CNN) and Semantic Segmentation Network (SSN), have proven robust, accurate, and reliable in various vision-based object detection tasks (Dias et al., 2018), but many improvements are needed before they can be integrated into robotic pollination. For example, existing algorithms can identify the tentative area of blossoms but cannot pinpoint their exact location. They also suffer from false positive detections, in which objects such as branches and leaves are reported as flowers. This proposed project will focus on developing a deep learning-based vision system that precisely identifies and locates king flowers in the tree canopies, which would lead to efficient and reproducible pollination of apples and thus help increase the yield of high-quality apples.

In addition, thinning is a particular challenge for apple growers in Pennsylvania. Apple blossom thinning is a procedure that has been in practice for hundreds of years. The thinning, or removal, of blossoms is critical to efficient apple tree fruit production. The absence of blossom thinning can result in poor quality fruit, diminished fruit size, breakage of limbs, exhaustion of tree reserves, and ultimately a reduction in tree life. Thinning is a complex, time-sensitive procedure that reduces fruit branch loading, resulting in a higher quality, larger sized product. Currently, hand-thinning is still a necessary and costly practice in apple production, and an incalculable number of crops are lost annually due to lack of labor and the resulting non-thinned crops (NIFA, 2009).

Cooperators

Research

Objective 1: Flower cluster dataset establishment

A series of image acquisitions were conducted in 2022 at Penn State Fruit Research and Extension Center (Biglerville, PA). Gala and Honeycrisp apple varieties were selected for the tests. The test trees were planted in 2014 with tree spacings of 1.5 m (Gala) and 2.0 m (Honeycrisp). These trees were trained in tall spindle canopy architecture with 4 m in height on average (Figure. 3.2). A ZED 2 camera (Stereolabs Inc., San Francisco, CA) was mounted on a frame, which was attached to a Kubota utility vehicle to take images of the tree canopies. The images were taken at the highest available pixel resolution (4416×1242) in such a way that each image contained the entire apple canopy with flowers, which allowed for the comparison of the detection results with manual counts. To obtain good quality images, the distance between the camera and the tree trunks was measured and set at 1.5 m and remained relatively constant for the entire image acquisition process. A total of 20 random trees were selected from each variety. The images were taken at the flowering stages of 20% (king bloom), 40%, 60%, and 80% defined by a pomology expert. The flowering stage was estimated by the following equation: flowering stage= number of opened flowers/5, where 5 is the average number of flowers in a cluster. At each flowering stage, the utility vehicle stopped at each tree and captured images for the targeted canopy. A total of 800 images (10 images for each tree × 20 trees × 4 flowering stages) were collected for each variety for later image processing. The images were taken under natural daylight illumination. Figure 1 shows the image acquisition system and several apple trees used in this work.

Figure 1. Image acquisition system with a ZED camera mounted on a Kubota utility vehicle.

As indicated earlier, a total of 800 original images were collected for each cultivar across the entire flowering period, from first pink to full bloom. However, because deep learning model training requires a large amount of data, the dataset was augmented through image pre-processing. Each image was scaled, flipped, cropped, and rotated, generating four new images per original image (Figure 2). With augmentation, the 800 originals were joined by 3,200 derived images, giving 4,000 images in total.

Figure 2. Image augmentation process to enlarge the dataset, including scaling, flipping, cropping, and rotation.
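The four augmentation operations can be sketched as follows. This is a minimal illustration rather than the pipeline used in the project: images are represented as nested lists of pixel values, and the scale factor, crop size, and rotation angle are assumptions chosen only to keep the example small.

```python
# Illustrative sketch only. Images are nested lists (rows of pixel values).
# The transform parameters below are assumptions, not the project's settings.

def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def center_crop(img, out_h, out_w):
    """Cut an out_h x out_w window from the middle of the image."""
    top = (len(img) - out_h) // 2
    left = (len(img[0]) - out_w) // 2
    return [row[left:left + out_w] for row in img[top:top + out_h]]

def scale_nearest(img, factor):
    """Enlarge by an integer factor using nearest-neighbor sampling."""
    h, w = len(img), len(img[0])
    return [[img[r // factor][c // factor] for c in range(w * factor)]
            for r in range(h * factor)]

def augment(img):
    """Return four augmented variants of one original image."""
    h, w = len(img), len(img[0])
    return [
        scale_nearest(img, 2),
        flip_horizontal(img),
        center_crop(img, max(1, h // 2), max(1, w // 2)),
        rotate_90(img),
    ]

original = [[1, 2, 3, 4],
            [5, 6, 7, 8]]
variants = augment(original)

# 800 originals, each kept and joined by its four variants.
dataset_size = 800 * (1 + len(variants))
```

Keeping each original alongside its four variants is what takes the 800 collected images to the 4,000 reported.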

Objective 2: Flower Cluster Localization and King Blossom Identification

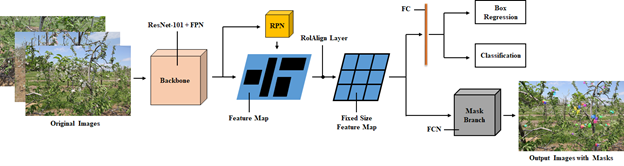

While previous approaches to apple flower detection implemented semantic segmentation, instance segmentation has become the state-of-the-art approach in machine vision research. It aims to predict the object class label and a pixel-specific instance mask, which identifies individual objects within each class (Hafiz & Bhat, 2020). Instance segmentation involves object detection using a bounding box followed by segmentation within that box. One of the most successful approaches is Mask R-CNN, which extends the Faster R-CNN detection algorithm with a relatively simple mask predictor, adding pixel-level masks at a small computational overhead. Instance segmentation approaches based on Mask R-CNN have shown outstanding results in recent instance segmentation challenges (Hafiz & Bhat, 2020). Figure 3 illustrates the Mask R-CNN model used for detecting flowers in this study. The output of apple flower segmentation is a set of colored masks of individual flowers. These masks contain information about each detected flower, including its size (number of pixels) and location (coordinates).

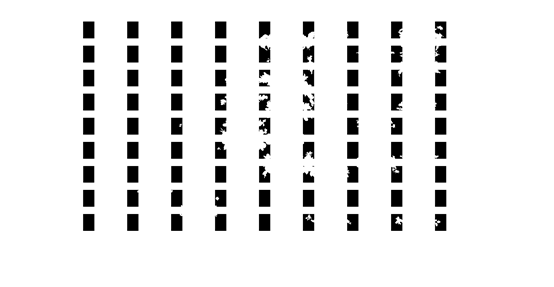

Every fruiting spur on an apple tree produces a cluster of five to six buds. The central blossom, often known as the king blossom, is the critical target of precision pollination. To precisely identify the king blossom within each flower cluster, the first step is to localize each flower cluster separately in the apple canopy. In this study, a novel flower cluster identification method based on 2D flower density mapping is proposed. With the output from the Mask R-CNN-based instance segmentation described in the previous paragraph, the masks of the detected apple flowers can be easily separated from the background, as shown in Figure 4.

Figure 4. Left: Original apple canopy image. Right: Extracted apple flower masks.

The next step is to divide the image of flower masks into equally sized super pixels based on the size of the original image. In this application, the image size is 5184×3456 and the super pixel size was chosen as 576×384, so a total of 81 super pixels were obtained, each carrying a different section of the flower masks, as shown in Figure 5.

Figure 5. Image of flower masks was divided into 81 super pixels.

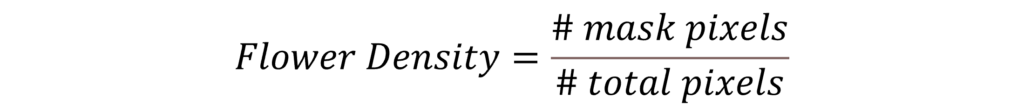

For each individual super pixel, the flower density is calculated as the percentage of flower mask pixels (white-colored pixels) over the total pixels, which is shown in the following equation:

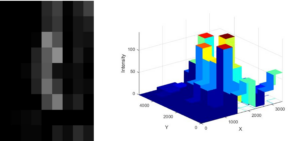

Based on the calculated density value within each super pixel, a colormap is assigned to indicate how many flower mask pixels the super pixel contains. The brighter the color, the more flower mask pixels are concentrated in the super pixel. A sample flower density map is shown in Figure 6.

Figure 6. Left: Apple flower density 2D mapping. Right: Apple flower density 3D mesh
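The density mapping step can be sketched as follows. This is a simplified illustration, not the project's code: the mask is a nested list of 0/1 values, and the fixed `threshold` used to pick out "brighter" super pixels is an assumption, since the report does not state how the brighter super pixels were selected.

```python
def density_map(mask, block_h, block_w):
    """Fraction of flower pixels (value 1) in each super pixel of a binary mask."""
    rows = len(mask) // block_h
    cols = len(mask[0]) // block_w
    grid = []
    for br in range(rows):
        grid_row = []
        for bc in range(cols):
            flower = sum(
                mask[r][c]
                for r in range(br * block_h, (br + 1) * block_h)
                for c in range(bc * block_w, (bc + 1) * block_w)
            )
            grid_row.append(flower / (block_h * block_w))
        grid.append(grid_row)
    return grid

def dense_blocks(grid, threshold):
    """(row, col) indices of super pixels whose density exceeds the threshold.

    The fixed threshold is a hypothetical selection rule for this sketch.
    """
    return [(r, c) for r, row in enumerate(grid)
            for c, d in enumerate(row) if d > threshold]

# Grid size stated in the report: 5184x3456 image, 576x384 super pixels.
blocks_across, blocks_down = 5184 // 576, 3456 // 384

# Toy 4x4 mask divided into 2x2 super pixels.
toy_mask = [[1, 1, 0, 0],
            [1, 0, 0, 0],
            [0, 0, 0, 0],
            [0, 0, 0, 1]]
toy_grid = density_map(toy_mask, 2, 2)
hot = dense_blocks(toy_grid, 0.5)
```

In the toy mask only the top-left super pixel (three flower pixels out of four) passes the threshold, and the stated image and super pixel sizes give the 9 × 9 = 81 grid described above.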

Since flower clusters are more likely to be located where the flower density is higher than in the surroundings, the algorithm outputs the coordinates of the brighter super pixels, which represent the probable locations of flower clusters. As a further step, the density map is converted into a 3D mesh map (Figure 6) for a clearer view of each possible flower cluster location. Each output coordinate is treated as the center of a circle with a fixed radius. With an appropriately chosen radius, the flowers included within each circle are considered a detected flower cluster. Within each separated flower cluster, the flower located at the most central position is considered the king blossom of that cluster. The image dataset acquired in Objective 1 was used to train and evaluate the flower cluster detection and king flower identification algorithms.
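The circle-based grouping and the king blossom selection can be illustrated with a short sketch. It assumes that each detected flower is reduced to the (x, y) centroid of its mask and that "most central" means nearest to the mean position of the flowers in the cluster. Both are assumptions made for this example, and the coordinates and radius are hypothetical.

```python
import math

def flowers_in_cluster(flowers, center, radius):
    """Flower centroids lying within `radius` pixels of a cluster center."""
    return [f for f in flowers if math.dist(f, center) <= radius]

def king_blossom(cluster):
    """Flower nearest to the mean position of the cluster members.

    Using the cluster mean as the reference point is an assumption.
    """
    cx = sum(x for x, _ in cluster) / len(cluster)
    cy = sum(y for _, y in cluster) / len(cluster)
    return min(cluster, key=lambda f: math.dist(f, (cx, cy)))

# Hypothetical flower centroids in pixel coordinates.
flowers = [(100, 100), (110, 105), (95, 110), (105, 95), (102, 102), (400, 400)]
cluster = flowers_in_cluster(flowers, center=(100, 100), radius=30)
king = king_blossom(cluster)
```

Here the distant flower at (400, 400) is excluded from the cluster, and the flower at (102, 102) is selected because it lies closest to the mean position of the remaining five.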

Objective 3: Develop a path sequencing algorithm to support the decision making for chemical suspension

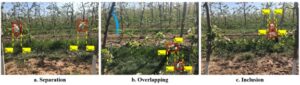

The output of the detection model was pixel-level masks of flower clusters, each with a bounding box (Figure 7). The x-coordinates of each bounding box were extracted to represent the location of each flower cluster in the horizontal direction. The positions of the flower clusters within each window of the trellis wire were treated as a sequence of paired x-coordinates (xmin,i, xmax,i), where xmin,i was the starting position of the i-th flower cluster, xmax,i was its ending position, and i ran from 1 to the number of flower clusters detected in the image. Before the sequence of x-coordinates was sent to the Cartesian manipulator, a decision-making algorithm was used to address two special situations regarding flower cluster positions: overlapping and inclusion.

Figure 7. Illustration of relative position between clusters. From left to right: non-overlapping clusters, overlapping clusters and occluded clusters.
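One plausible way to handle overlapping and included clusters is to merge their x-ranges into a single supercluster before sending targets to the manipulator. The sketch below is a standard interval-merging routine offered as an illustration under that assumption. The report does not spell out the exact decision rules, and the coordinate values are hypothetical.

```python
def merge_clusters(spans):
    """Merge overlapping or nested (xmin, xmax) spans, ordered left to right."""
    merged = []
    for xmin, xmax in sorted(spans):
        if merged and xmin <= merged[-1][1]:
            # Overlap or inclusion: extend the current supercluster.
            merged[-1] = (merged[-1][0], max(merged[-1][1], xmax))
        else:
            # No contact with the previous span: start a new target.
            merged.append((xmin, xmax))
    return merged

# Hypothetical bounding-box x-ranges in pixels.
spans = [(300, 380), (40, 120), (100, 160), (310, 350), (500, 560)]
targets = merge_clusters(spans)
```

Sorting first means the merged targets are also in left-to-right order, which matches a manipulator that sprays while moving along the X direction only.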

The final coordinates of each flower cluster were sent to a Cartesian robotic system (introduced in Objective 4) as the targets for moving the manipulator after all flower clusters (and superclusters) in an image had been localized. The Cartesian robotic system could move along three orthogonal axes, X, Y, and Z, with an individual linear actuator mounted along each direction. A chemical spraying system, including a solenoid valve and a flat spray nozzle tip, was mounted at the front arm of the Cartesian robotic system as the end-effector. The AIXR TeeJet flat spray nozzle offers a spray angle of 110 degrees, providing a fan-shaped coverage width of 30 centimeters at a distance of one meter from the target surface. The droplet size is classified as Coarse (C), with a diameter of 340 micrometers.

Using the output coordinates from the vision system, the Cartesian robotic system moved the end-effector to the targeted flower clusters within a range of 90 cm. Since the trees in the test field were trained to horizontal branches with trellis wire support, most of the flower clusters were located within 15 centimeters of the wires. In addition, the distance between the robotic system and the apple tree trunks was kept constant at ~60 cm. Therefore, the robotic system held fixed positions in the Y (vertical) and Z (distance to the tree trunk) directions, and during spraying the nozzle moved only in the X direction, along the trellis wires.

Objective 4: Integrate the vision system and robotic manipulator to perform field evaluation (chemical thinning as an example)

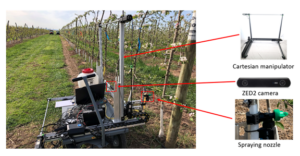

A cartesian robotic system (Figure 8) was designed for precision apple blossom thinning consisting of three major components: 1) a color stereo camera (ZED2, Stereolabs Inc., San Francisco, CA) based machine vision system, 2) a cartesian system-based manipulator, and 3) a spraying end-effector with a solenoid valve (55295-1-12, TeeJet Technologies, Glendale Heights, IL) and flat-shaped nozzle (AIXR11003, TeeJet Technologies, Glendale Heights, IL), as shown in Figure 8. The entire system was placed on a utility cart that could be moved manually along the tree rows. A four-gallon battery-powered backpack sprayer (Chapin 63985, Chapin International Inc., Batavia, NY) filled with chemical thinner (Lime Sulfur) was also placed on the cart. The solenoid valve and nozzle were mounted at the end of the cartesian manipulator and connected to the sprayer with a chemical-resistant soft plastic tube.

Figure 8. Overall description of the cartesian robotic sprayer and its three major components.

The vision system and the end-effector were integrated with the cartesian spraying system. The system was tested on the trellis-trained Fuji apple trees in Biglerville, PA. The robotic spraying system was compared with two conventional spraying approaches to verify and assess the performance for precision apple blossom thinning: boom-type sprayer and air-blast sprayer (Figure 9). The boom-type sprayer consisted of a six-foot-long plastic pole with seven nozzles. The nozzles were manually controlled using the Arduino MEGA microcontroller as a switch. The boom-type sprayer was mounted at the back of a utility vehicle (Polaris Range EV, Polaris Inc, Medina, MN) driven at 1.3 m/s during spraying. The air-blast sprayer is an intelligent sprayer equipped with a 150-gallon tank and a LiDAR sensor-based smart spraying kit (Smart Apply, Inc., Indianapolis, IN). The distance between the trellis wire and the ZED2 camera remained at 60 cm to satisfy the preset parameters in the robotic sprayer vision system throughout the experiment.

Figure 9. Spraying systems used in the test. Left: Cartesian target sprayer (CTS); middle: boom-type sprayer (BTS); right: air-blast sprayer (ABS)

The total chemical usage of the CTS was recorded by doubling the actual usage, because the CTS covered only two sections, which was half of an entire apple tree. The chemical usage for both the BTS and ABS was measured from the sprayer tanks. Two weeks after the treatments, the number of green fruit set was counted for every limb of each experimental unit. The number of green fruits per cluster was also recorded to determine the effectiveness of chemical thinning within each cluster.

In 2024 season, a UGV-based robotic system was designed for precision apple blossom thinning, which consisted of four major components (Figure 10): i) a color stereo camera (ZED2, Stereolabs Inc., San Francisco, CA) based machine vision system; ii) a Husky A200 unmanned ground vehicle (Clearpath Robotics, Inc., Waterloo, Canada); iii) a spraying end-effector with a solenoid valve (55295-1-12, TeeJet Technologies, Glendale Heights, IL) and flat-shaped nozzle (AIXR11003, TeeJet Technologies, Glendale Heights, IL); and iv) a set of RTK GPS (Inertial Labs, Paeonian Springs, VA). The ZED2 stereo camera was mounted on the side of the aluminum frame at a height of 70 cm, positioned approximately 100 cm from the tree trunk. This setup ensured full coverage of its field of view at each specific trellis wire window of the canopy.

Figure 10. Unmanned Ground Vehicle-Based Chemical Blossom Thinning System

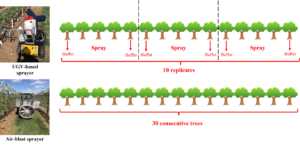

Both the UGV-based target sprayer (UTS) and air-blast sprayer (ABS) were tested on the same day for precision apple blossom thinning. Furthermore, results of the prior year's cartesian target sprayer (CTS) experiments were integrated into the analysis, as the experimental design remained consistent. For both UTS and CTS experiments, three consecutive trees were selected as one experiment unit, and 10 contiguous replicates were used for each treatment (Figure 11). Two additional buffer trees were included at the beginning and the end of each experiment unit to prevent chemical deposition from other treatments. A skilled technician operated the tractor as it traversed a stretch of 30 consecutive trees for the ABS system. Another 30 trees left in the row were used for an un-thinned control (UTC) to compare the effectiveness of thinning.

Figure 11. UGV-based chemical blossom thinning system experiment design

Objective 1 & 2: Flower Cluster Detection with the Developed Machine Vision System

Figure 12 shows an example of the detection results obtained with the developed Mask R-CNN instance segmentation approach. The original output of the instance segmentation model contained pixel-level masks together with bounding boxes indicating the detected flower clusters. In this study, the most critical information needed from the vision system was the pair of x-coordinates of each flower cluster, which can be extracted directly from the bounding box, so the visualization code was modified to show only the bounding boxes in the output images.

Figure 12. Mask R-CNN based instance segmentation of apple flower clusters

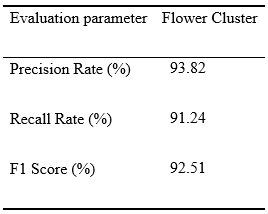

The performance of the developed flower cluster detection model was satisfactory. Based on the precision, recall, and F1 score, along with field test observations, most of the flower clusters were correctly detected. The recorded data showed that 216 flower clusters were counted in total on the 18 sample trees for the CTS. The vision system detected 201 of them, which corresponds to an accuracy of 93.06% (201/216). An accurate vision system helps the overall system locate the spraying targets more precisely and therefore leads to better blossom thinning performance.

Table 1. Precision, Recall, and F1-score for flower clusters detection model
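For reference, the reported detection figure and the standard definitions of precision, recall, and F1 score can be written out as below. The 201 and 216 counts come from the text above. The true positive, false positive, and false negative counts in the second call are hypothetical placeholders used only to exercise the formulas, and they are not values from Table 1.

```python
def detection_rate(detected, total):
    """Percentage of manually counted clusters found by the vision system."""
    return 100.0 * detected / total

def precision_recall_f1(tp, fp, fn):
    """Standard detection metrics from true/false positive and false negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Counts reported for the 18 CTS sample trees.
rate = detection_rate(201, 216)

# Hypothetical counts, NOT from the report.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
```

The first call reproduces the 93.06% figure from the field counts.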

Objective 3 & 4: Robotic Spraying System Integration and Field Evaluation

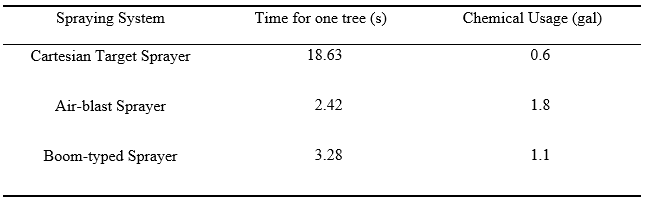

The proposed Cartesian target sprayer (CTS) was compared with an air-blast sprayer (ABS) and a boom-type sprayer (BTS) for apple blossom thinning. Three measurements were recorded during the field test: spraying time, chemical (lime sulfur) usage, and the number of green fruit set before June drop. The CTS took 4.66 s to spray one of the four sections of a trellis-trained apple tree. To make the data comparable, the recorded CTS time was multiplied by four to estimate the total time needed to spray a whole tree. Table 2 shows that processing time was not an advantage of the proposed spraying system: the CTS was six to nine times slower than the BTS and ABS. The main reason was that the speed of the linear actuators on the Cartesian manipulator was set to one mph, while the driving speed of the BTS and ABS was around 3-3.5 mph. Another significant reason was the processing route: after spraying one limb, the Cartesian manipulator had to return to its home position before it could start toward the next target limb, which doubled the distance the manipulator needed to travel.
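The per-tree time estimate is simple arithmetic, sketched here for clarity. The 4.66 s and four-section figures are from the paragraph above. The comparison uses the 2.4 s per tree reported for the air-blast sprayer in the UGV comparison later in this report, and assuming that this figure also applies to the CTS trial is a simplification made only for this example.

```python
SECTIONS_PER_TREE = 4

def per_tree_time(section_time_s, sections=SECTIONS_PER_TREE):
    """Scale a measured per-section spray time up to a whole tree."""
    return section_time_s * sections

cts_tree_time_s = per_tree_time(4.66)

# Air-blast time per tree as reported in the later UGV comparison (assumed
# here to be comparable with the CTS trial).
abs_tree_time_s = 2.4
slowdown_vs_abs = cts_tree_time_s / abs_tree_time_s
```

Under that assumption the CTS needs about 18.6 s per tree, roughly 7.8 times the air-blast time, which falls within the six-to-nine-fold range stated above.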

Despite its longer processing time, the CTS substantially reduced the use of chemical thinner. As shown in the third column of Table 2, the amount of chemical sprayed decreased by 66.7% and 45.5% compared to the ABS and BTS, respectively.

Table 2. Comparison of Three Different Sprayers for the spraying time and chemical usage

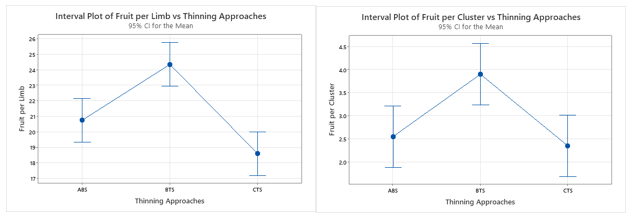

Another major metric for assessing the effectiveness of thinning was the number of green fruit set before June drop. The ANOVA in Figure 13 does not show that the number of green fruits on the trees thinned by the CTS was significantly lower than for the other two treatments, although its average number of green fruits was the lowest of the three. The Cartesian target sprayer therefore achieved roughly the same thinning effectiveness while reducing the usage of chemical thinner by 45.5% to 66.7%.

Figure 13. One-way ANOVA mean analysis of green fruit counting among three sprayers

Another observation was made during the manual green fruit counting. Among the sample trees for the Cartesian target sprayer, researchers observed a few clusters that contained five to six green fruits, which meant that no thinning had been applied. All of these un-thinned clusters were located at the back of the trellis wire, where the Cartesian target sprayer could neither detect the hidden flower clusters nor reach those locations with the current mechanical design. This shortcoming needs to be solved to improve the robustness of the overall system.

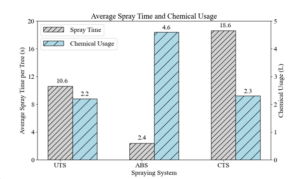

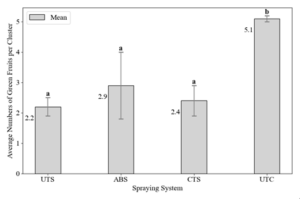

For the unmanned ground vehicle (UGV)-based spraying system, a set of field experiments was carried out, and its performance was compared with the air-blast sprayer (ABS) and the Cartesian target sprayer (CTS) developed earlier. The results are shown in Figures 14 and 15.

Figure 14. Comparison of average spray time for different spraying systems

Figure 15. Comparison of average green fruits remained within a cluster under different spraying systems

The UGV-based target sprayer was observed to reduce chemical usage by 66.7% compared to the air-blast sprayer. Conversely, the air-blast sprayer, with its rapid spray time of 2.4 seconds per tree, was the fastest sprayer to complete the thinning process. However, it sprayed an excessive amount of chemical (4.6 L), which might lead to fruit russeting and environmental pollution. The UGV-based target sprayer was 43.0% faster in operation than the Cartesian sprayer. The Cartesian target sprayer was the slowest of the three spray systems, mainly because of the slow speed of its linear actuators, which limited its applicability to commercial operations. Another important metric used to assess the effectiveness of chemical thinning was the number of green fruits set before June drop. As shown in Figure 15, the average number of fruits per cluster was 2.2, 2.9, 2.4, and 5.1 for the UTS, ABS, CTS, and UTC, respectively. From the ANOVA, there was no significant difference in the number of green fruits among the UTS, ABS, and CTS, while all three thinning approaches achieved a significantly lower fruit set than the UTC.

In this project, a real-time vision system was developed specifically for the detection and precise localization of apple flowers and clusters in an orchard environment. The state-of-the-art Mask R-CNN instance segmentation technology was adopted to achieve high detection accuracy. This deep learning-based apple flower detection model was trained on datasets of apple orchard imagery, enabling it to distinguish individual apple blossoms from foliage with acceptable accuracy. The practical development of this real-time vision system is one of the keystones of the precision apple blossom thinning system. By continuously monitoring the orchard's blossoms, growers gain real-time insight into the flowering patterns of their apple trees and can use it to make informed decisions on crop load management.

To achieve targeted precision chemical thinning, the real-time vision system was first integrated with a highly adaptable Cartesian robotic manipulator. This integration focused on the fusion of computer vision and robotics to perform targeted and highly accurate chemical thinning of apple blossoms. The system not only identifies individual apple blossoms but also merges consecutive flower clusters into a single "super" flower cluster to improve thinning efficiency. This targeted spraying reduces chemical usage while keeping the thinning operation effective.

Later, recognizing the importance of precision agricultural technologies and the increasing demand for autonomous farming practices, the developed vision and robotic systems were integrated with a Husky A200 unmanned ground vehicle (UGV). This integration represented another step toward a fully autonomous system for orchard management. It not only identifies apple blossoms but also directs the robotic manipulator to perform chemical thinning operations autonomously. This approach provides the opportunity to reduce the need for human labor in large-scale operations. Furthermore, integrating these technologies onto the UGV not only increases operational efficiency but also enhances safety by minimizing human exposure to chemicals, thereby promoting safer and more sustainable agricultural practices. The outcomes of this study provide a basis for developing an automatic precision spraying system for apple blossom thinning, which could benefit the apple industry economically and environmentally.

Education & outreach activities and participation summary

Participation summary:

Throughout the project period, the following education and outreach activities were conducted:

1. Journal publications

- Mu, X., He, L., Heinemann, P., Schupp, J., Karkee, M. and Zhu, M. 2024. UGV-based precision spraying system for chemical apple blossom thinning on trellis trained canopies. Journal of Field Robotics, 2024, 1-12.

- Mu, X., He, L., Heinemann, P., Schupp, J. and Karkee, M. 2023. Mask R-CNN based apple flower detection and king flower identification for precision pollination. Smart Agricultural Technology, 4, p.100151.

- Mu, X., Hussain, M., He, L., Heinemann, P., Schupp, J., Karkee, M. and Zhu, M. 2023. An advanced robotic system for precision chemical thinning of apple blossoms. Journal of the ASABE, 66(5), 1125-1134.

2. Webinars, talks and presentations

- Xinyang Mu (Presenter), Long He. An UGV-based Precision Spraying System for Chemical Apple Blossom Thinning on Trellis Trained Canopies. 2023 ASABE Annual International Meeting. 7/9-12/2023. Omaha, NE.

- Xinyang Mu (Presenter), Long He. An Advanced Cartesian Robotic System for Precision Apple Blossom Thinning. 2022 ASABE Annual International Meeting. 7/18-20/2022. Houston, TX.

- Xinyang Mu (Presenter), Long He. Mask R-CNN Based King Flowers Identification for Precision Apple Pollination. 2022 Mid-Atlantic Fruit and Vegetable Grower Convention. 2/2/2022. Hershey, PA.

- Xinyang Mu (Poster Presenter), Long He. Mask R-CNN Based King Flowers Identification for Precision Apple Pollination. 2022 Mid-Atlantic Fruit and Vegetable Grower Convention. 2/2/2022. Hershey, PA.

3. Workshop and Field Days

- On September 14, 2022, introduction and demonstration of current progress on the apple flower cluster detection and precision blossom thinning was done for about 30 farmers at the Plant Protection Field Day at the Fruit Research and Extension Center in Biglerville, PA.

- On June 6, 2023, introduction and demonstration of current progress on the robotic precision spraying system for apple blossom thinning was done for about 25 farmers and 5 ag service providers at the 2023 Precision Technology Field Day at the Fruit Research and Extension Center in Biglerville, PA.

4. Others

- The outcomes of the project were mentioned in many different presentations by the PIs to various audiences in 2024.

- The developed robotic chemical blossom thinning system was demonstrated at Penn State Ag Progress Days 2022 from August 9-11. In total, more than 100 growers and members of the general public were reached.

- The project was featured, including an interview, in WQED Pittsburgh's The Growing Field: Future Jobs in Agriculture in October 2022.

Project Outcomes

Blossom thinning is one of the key steps in apple crop load management: it improves apple quality, reduces stress on the trees, and lowers the likelihood of biennial bearing. Conventional chemical blossom thinning, such as air-blast spraying, can lead to excessive use of chemical thinner to ensure full coverage, which can also cause leaf damage and fruit russeting. In addition, these chemical spraying systems require a well-trained operator. To address these challenges, we developed an unmanned ground vehicle (UGV)-based precision spraying system that automatically and precisely sprays chemicals onto the targeted flower clusters. The spraying system includes a machine vision system to detect and localize flower clusters in tree canopies, a targeted chemical spraying system that applies chemicals precisely to the desired locations, and a mobile robot system that carries them for continuous operation in orchards. We have presented and demonstrated the system to growers and stakeholders at different extension events. We have also presented our research outcomes to the scientific community through professional meetings and publications in scientific journals.

This study makes several major contributions that can potentially benefit farmers and society. First, a machine vision system was developed to automatically detect flower clusters using Mask R-CNN-based instance segmentation. Flower cluster detection achieved a precision of 93.8%, which is a very important step for the effectiveness of the entire robotic system. Second, a precise nozzle control system was developed to deliver the chemical to the locations determined by the machine vision system. Third, an unmanned ground vehicle integrated with RTK GPS was used to drive the spraying system continuously through orchards. A series of field tests indicated that the newly developed UGV-based targeted spraying system reduced chemical thinner usage by 66.7% compared to the conventional air-blast sprayer, which potentially reduces production cost while minimizing environmental impact. Owing to the high accuracy of flower cluster detection and geo-referencing of the detected flowers, the thinning system achieved an average green fruit set of 2.2 fruits per cluster, which is comparable to the conventional method using air-blast sprayers.
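For readers less familiar with the two headline metrics above, the following is a minimal sketch of how a detection precision and a percent reduction in chemical usage are typically computed. The function names and all input values are hypothetical and were chosen only so the arithmetic lands on figures of the same form as those reported; they are not the project's raw counts or spray volumes.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of detections that correspond to real flower clusters."""
    detections = true_positives + false_positives
    if detections == 0:
        raise ValueError("no detections to score")
    return true_positives / detections


def percent_reduction(baseline: float, treatment: float) -> float:
    """Percent saved relative to the baseline amount (same units for both)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - treatment) / baseline


# Hypothetical inputs for illustration only; not project data.
example_precision = precision(true_positives=938, false_positives=62)
example_saving = percent_reduction(baseline=3.0, treatment=1.0)

print(f"precision: {example_precision:.1%}")     # precision: 93.8%
print(f"thinner saved: {example_saving:.1f}%")   # thinner saved: 66.7%
```

Under these assumed inputs, a 66.7% reduction corresponds to the targeted system using about one third of the thinner volume applied by the baseline sprayer over the same trees.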

In summary, this project successfully developed a robotic precision chemical blossom thinning system for apple crop load management. The outcomes of this study provide a basis for developing an automatic precision spraying system for apple blossom thinning, which could benefit the apple industry economically and environmentally. Furthermore, the outcomes could potentially be extended to crop load management in other crops and beyond.

This project gave us a great opportunity to work on an important challenge that the tree fruit industry faces today, namely how to conduct crop load management effectively in orchards. It taught us many aspects of using precision technologies for tree fruit crop load management. To achieve precision crop load management at the flower stage, the machine vision system, nozzle control, mobile robot navigation, and their coordination are all essential. We worked on each of these components, developing different prototypes and algorithms to arrive at an optimal solution. For chemical blossom thinning, saving chemical (i.e., lime sulfur) not only reduces cost but also minimizes environmental impact. The overall system is expected to increase the profitability of apple production and help maintain the sustainability of the apple industry. Even though this project focused only on blossom thinning in apples, the technology is expected to be applicable, with minimal modification, to other tree fruit crops, thus benefiting a large number of additional specialty crop producers. In addition, the sensing technologies for flower cluster detection and localization and the manipulation technology for the targeted spraying end-effector may benefit a range of other applications that rely on robotics in production agriculture and may extend to other cropping systems as well. Therefore, this study is highly relevant and timely, with the potential to make a large positive impact on the competitiveness and the economic and social sustainability of the overall tree fruit industry in the country.

Through the project, we also identified some limitations that can be addressed in the future. For example, 1) the flower cluster detection model can be further improved. Vision Transformers (ViT) offer a more streamlined inference process and can achieve higher detection accuracy by capturing global context and relationships between objects. 2) A navigation system programmed and mapped in the Robot Operating System (ROS) needs to be installed so that the spraying system can autonomously navigate through the environment, accurately reach target areas, and perform spraying tasks efficiently without human intervention. 3) Additional degrees of freedom (DoF) need to be considered for the manipulator to handle the complex structure of apple trees in commercial orchards.

Information Products

- An UGV-based Precision Spraying System for Chemical Apple Blossom Thinning on Trellis Trained Canopies

- Mask R-CNN Based King Flowers Identification for Precision Apple Pollination

- Mask R-CNN Based King Flowers Identification for Precision Apple Pollination

- An Advanced Cartesian Robotic System for Precision Chemical Thinning of Apple Blossoms

- UGV-Based Precision Spraying System for Chemical Apple Blossom Thinning on Trellis Trained Canopies