Progress report for GS24-316

Project Information

In the United States, onion production relies predominantly on transplants, because direct-seeded onions are highly vulnerable to weed pressure. Growers choose transplanting despite its high cost because reliable organic weed control practices are lacking. Weeds compete with the crop for water and nutrients in the soil and can attract insects and diseases, which can severely reduce crop health and yield. Weed management has traditionally relied on manual weeding and chemical herbicides. Manual weeding is labor-intensive and expensive, and it often damages the crops it is meant to protect. Herbicides, while effective at controlling weeds, introduce environmental risks such as soil and water contamination, damage to non-target species, and the development of herbicide-resistant weed populations. As demand grows for organic and sustainable farming, chemical weed control is becoming less viable. In response to these challenges, this research introduces an autonomous weeding robot designed specifically for onions that uses laser technology to precisely target and eliminate weeds. The project has three main objectives: first, to accurately detect weeds in onion crops under variable lighting conditions; second, to autonomously eliminate weeds using a robotic manipulator equipped with a laser; and third, to evaluate the system thoroughly in controlled-environment and field conditions. By combining robotics with laser technology, the system aims to provide an efficient, eco-friendly weeding solution that protects crop yields while reducing the need for manual labor and chemical herbicides.

The main aim of this study is to develop an autonomous robotic manipulator, equipped with a CO2 laser as its end effector, that uses artificial intelligence to detect weeds and can position the laser in three dimensions to eliminate them. Development of the proposed system will focus on the following objectives.

- Develop a real-time weed detection system for onion fields using RGB and depth data

A detection system will be developed using a stereo vision camera capable of simultaneously capturing high-resolution RGB images and depth data. This camera will provide detailed environmental information, including precise depth measurements and accurate 3D mapping of the field. The RGB data will enable effective differentiation between crops and weeds based on differences in color and texture, while the depth data will help localize the weeds precisely in 3D space. Both RGB and depth data will be transmitted in real time to the onboard NVIDIA Orin edge computer, which will process the multimodal data using a single-shot, transformer-based computer vision model trained specifically to detect and localize multiple weed species within onion crops. The goal is to accurately identify weeds and estimate their positions in real time within the camera’s field of view.

- Precision targeting of a high-power diode laser using a delta parallel robot for laser-based weed control

The robotic manipulator will be implemented as a delta robot based on Clavel's design, with three degrees of freedom (DoF) for precise laser targeting. This configuration provides the flexibility, high speed, and positional accuracy required for rapid laser positioning. Control algorithms will be developed to autonomously align the laser with detected weed locations. These algorithms involve accurately determining joint positions, computing the inverse kinematics to obtain the required joint angles, and planning precise motion trajectories.

- System Integration and Validation

After the subsystems of the proposed robotic system have been developed, they will be integrated so that the laser weeder functions as a coherent whole. This integration involves coordinating the detection system, the motion of the robotic manipulator, and the actuation of the laser. The first goal is to minimize the latency between the real-time detection of a weed and the alignment of the laser beam on that weed. The second goal is to improve the accuracy of the system so that it eliminates weeds with minimal disturbance to healthy crop plants. Once the integrated system is functional, it will be evaluated thoroughly under controlled-environment and field conditions. This will involve validating the system’s speed and accuracy under conditions that are as challenging and diverse as possible.
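To make the integration chain concrete, the sketch below links three steps: a fixed camera-to-robot rigid transform, a shift that compensates for platform travel during the detection-to-firing latency, and the closed-form inverse kinematics of a Clavel-type delta robot that converts the resulting effector position into three joint angles. All geometry, calibration, speed, latency, and standoff values are placeholders, and the function names are hypothetical. The IK follows a standard geometric derivation, intersecting each upper-arm circle with its forearm sphere. It is not necessarily the controller this project will use.

```python
import math

# Placeholder geometry in millimetres (hypothetical, not the project's robot):
# F = base triangle side, E = effector triangle side, RF = upper arm, RE = forearm
F, E, RF, RE = 457.3, 115.0, 112.0, 232.0
TAN30 = 1.0 / math.sqrt(3.0)
COS120, SIN120 = -0.5, math.sqrt(3.0) / 2.0


def camera_to_robot(p_cam, R, t):
    """Rigid transform p_robot = R @ p_cam + t, with R given as nested lists."""
    return tuple(sum(R[i][j] * p_cam[j] for j in range(3)) + t[i] for i in range(3))


def effector_target(p_weed, speed_mm_s, latency_s, standoff_mm):
    """Effector position above a weed: shift back by the distance travelled during
    the latency (platform assumed to move along +x) and raise by a laser standoff."""
    x, y, z = p_weed
    return (x - speed_mm_s * latency_s, y, z + standoff_mm)


def _arm_angle(x0, y0, z0):
    """Joint angle (deg) of the arm lying in the YZ plane, or None if unreachable."""
    y1 = -0.5 * TAN30 * F   # base joint, offset from the base center along -Y
    y0 -= 0.5 * TAN30 * E   # move target from effector center to its edge joint
    # Subtracting the elbow circle and forearm sphere equations gives z = a + b*y
    a = (x0 * x0 + y0 * y0 + z0 * z0 + RF * RF - RE * RE - y1 * y1) / (2.0 * z0)
    b = (y1 - y0) / z0
    d = -(a + b * y1) ** 2 + RF * RF * (b * b + 1.0)  # discriminant
    if d < 0:
        return None
    yj = (y1 - a * b - math.sqrt(d)) / (b * b + 1.0)  # outer (elbow-out) solution
    zj = a + b * yj
    return math.degrees(math.atan2(-zj, y1 - yj))


def delta_ik(x, y, z):
    """Joint angles (deg) for effector position (x, y, z), with z < 0 below the base."""
    if z >= 0:
        return None
    angles = (
        _arm_angle(x, y, z),
        # Arms 2 and 3 solve the same problem with coordinates rotated by 120 degrees
        _arm_angle(x * COS120 + y * SIN120, y * COS120 - x * SIN120, z),
        _arm_angle(x * COS120 - y * SIN120, y * COS120 + x * SIN120, z),
    )
    return None if None in angles else angles


# Camera looking straight down, 450 mm above the robot-base origin (placeholder)
R_CAM = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]
T_CAM = (0.0, 0.0, 450.0)
weed = camera_to_robot((30.0, 20.0, 800.0), R_CAM, T_CAM)
target = effector_target(weed, speed_mm_s=100.0, latency_s=0.05, standoff_mm=150.0)
print(weed, target, delta_ik(*target))
```

Returning `None` for unreachable points gives the planner a simple signal to skip a weed or wait until the platform brings it into the workspace.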

Cooperators

- (Researcher)

- (Researcher)

- (Researcher)

Research

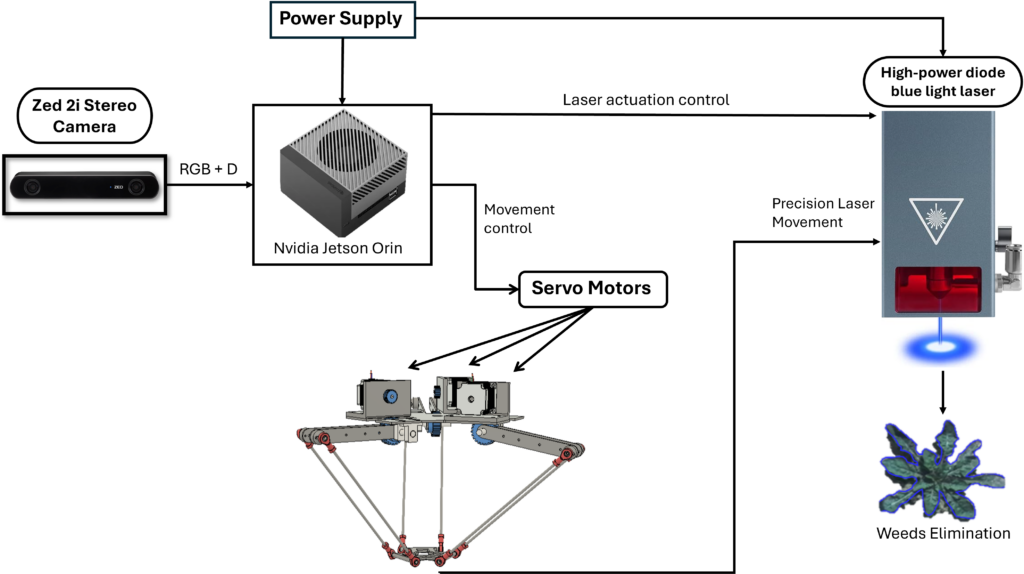

The development of the proposed robotic laser weeder will be done in three distinct stages. Each stage corresponds to the development of a sub-system or functionality within the overall system. Figure 1 illustrates the complete functionality of the system, highlighting each individual high-level component involved.

Objective 1: Develop a real-time weed detection system for onion fields using RGB and depth data

Onion Field Data Acquisition

The data collection phase involved four visits to the University of Georgia (UGA) Vidalia Onion and Vegetable Research Center in Lyons, GA. Onions at the center were planted in November, and by the time of the data collection visits the plants had reached the 3-5 true leaf stage. This growth stage was selected because it coincided with the weeds reaching their mature vegetative phase.

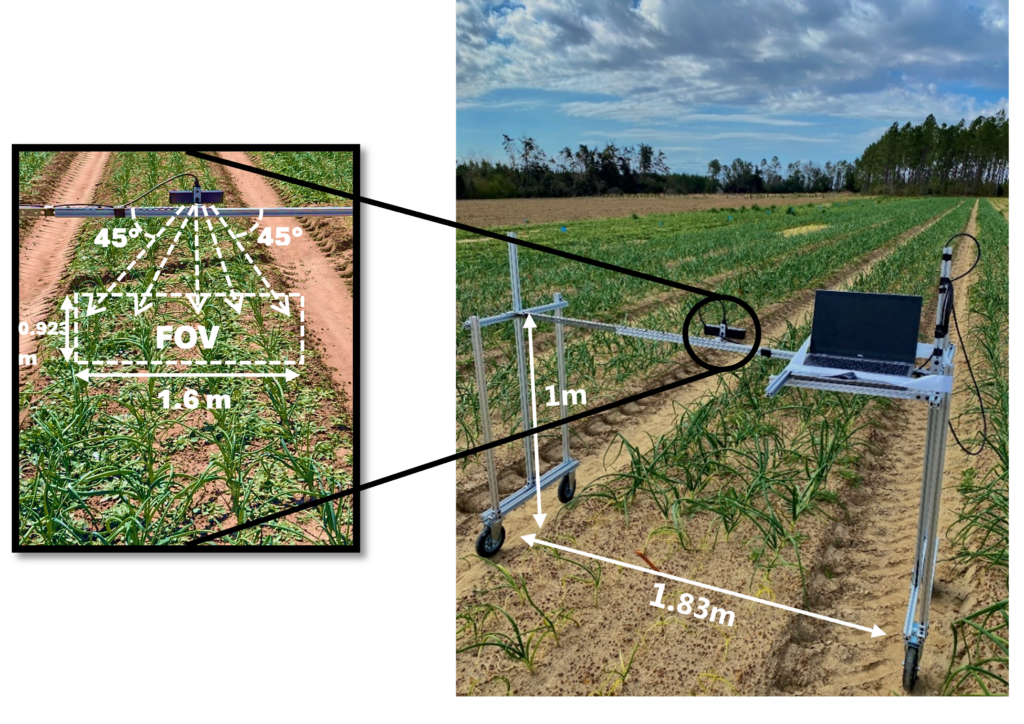

For data collection, a ZED 2i stereo vision camera by Stereolabs was used. It provides RGB and depth imagery at up to 2K resolution, and videos were recorded at 15 frames per second (fps). The manufacturer specifies a depth accuracy of better than 1% of the measured distance at ranges up to 3 m, which is useful for locating weeds in 3D space. A mobile data collection system was built from T-slotted aluminum framing supported by four off-road caster wheels (Figure 2). The width of the system was set at 6 feet to match the inter-row spacing of the Vidalia onion fields. The mobile system kept the camera's height and orientation consistent, providing stability and ease of movement.
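The depth stream is what turns a 2D detection into a 3D target, so a minimal sketch of that step may help. It assumes a pinhole camera model with placeholder intrinsics `fx`, `fy`, `cx`, `cy`. These are not ZED 2i values and should be read from the camera's own calibration. It also assumes a depth map in millimetres aligned with the RGB image and a detector that outputs pixel bounding boxes. The function name `locate_weed` and the use of the median depth inside the box are illustrative choices.

```python
import numpy as np


def locate_weed(box, depth_mm, fx, fy, cx, cy):
    """Estimate a weed's 3D position (metres, camera frame) from a bounding box.

    box            -- (x1, y1, x2, y2) in pixels from a 2D detector
    depth_mm       -- HxW depth map in millimetres aligned with the RGB image;
                      zero or non-finite values mark invalid pixels
    fx, fy, cx, cy -- pinhole intrinsics in pixels (placeholder values)
    """
    x1, y1, x2, y2 = (int(round(v)) for v in box)
    patch = depth_mm[y1:y2, x1:x2]
    valid = patch[np.isfinite(patch) & (patch > 0)]
    if valid.size == 0:
        return None  # no usable depth inside the box
    # The median is robust to stray soil or leaf-edge pixels inside the box
    z = float(np.median(valid)) / 1000.0
    u, v = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    # Pinhole back-projection: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


# Synthetic example: a flat scene 800 mm from the camera
depth = np.full((720, 1280), 800, dtype=np.uint16)
print(locate_weed((600, 320, 680, 400), depth, fx=700.0, fy=700.0, cx=640.0, cy=360.0))
```

Using the box center assumes the weed is roughly centered in its box; a segmentation mask, if available, would give a better target point.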

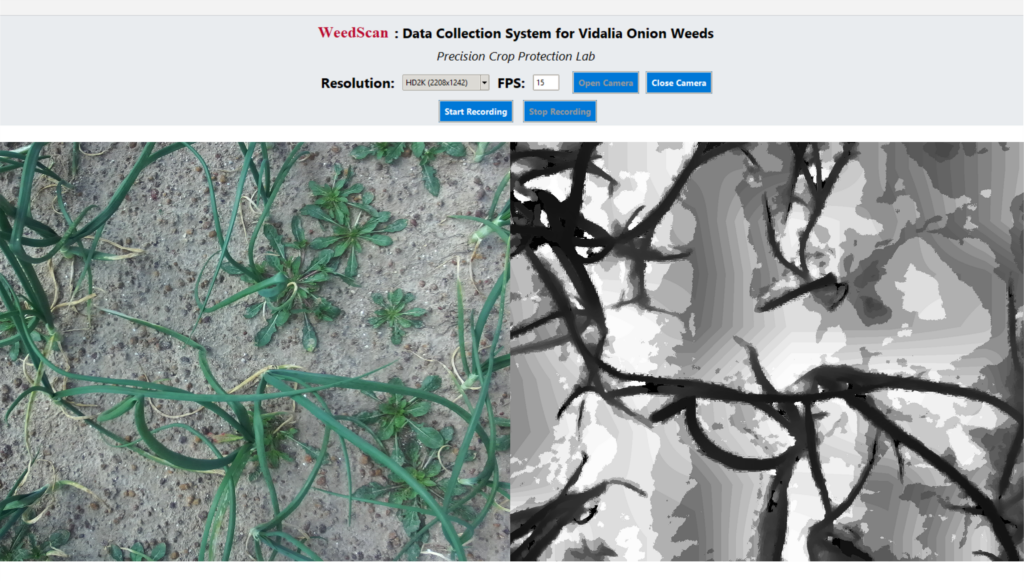

A graphical user interface (GUI) application was developed in Python with the Tkinter framework to streamline operation of the ZED 2i stereo vision camera during field data collection. The application provided real-time control of camera settings, such as resolution and frame rate, and of recording functions, and it used the ZED SDK for hardware control and image acquisition. Figure 3 shows the live RGB and depth streams displayed in the GUI, which enable real-time monitoring of data quality. Audio cues were also added to mark the start and stop of each recording session, making data capture easier under field conditions.

During data collection, continuous video was recorded for each onion row instead of individual static images. This approach provided uninterrupted spatial and temporal data, reducing information loss between frames and ensuring consistent visual coverage across the entire row. Static image collection required approximately 15 seconds per image for alignment, focusing, and recording, including system stabilization. In contrast, recording video at 15 fps improved time efficiency by approximately 95%. Individual frames containing both RGB and corresponding depth information were then extracted from the recorded videos to train the model.

Data Preprocessing

For video handling and data preservation, FFmpeg (Fast Forward Moving Picture Experts Group) was used to ensure that the depth and RGB data recorded by the ZED 2i camera were kept in their original raw format. FFmpeg is an open-source multimedia framework widely used for recording, converting, and streaming audio and video. It was selected over OpenCV because it handles high-resolution video files better and preserves critical metadata. FFmpeg reads the MKV files recorded by the ZED 2i camera directly, without introducing additional compression or dropping frames. Unlike OpenCV, which converts depth data to 8-bit format during decoding, FFmpeg preserves the depth stream in its native 16-bit "gray16le" format, so no quantization error or depth value distortion is introduced during processing. Frames were extracted at a fixed sampling rate of 2.3 fps, with a 66% overlap between consecutive camera fields of view (FOVs).
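The extraction step can be sketched in Python under several assumptions. The depth stream is assumed to be the second video stream in each MKV file (FFmpeg stream specifier `0:v:1`), sampled frames are written to one headerless raw file, and the travel speed and along-row FOV length take the hypothetical values shown. The sketch also shows how sampling rate relates to overlap. Consecutive frames are v / f apart, so the overlap is 1 - v / (f L) for speed v, frame rate f, and FOV length L. The `-map`, `-vf fps=`, `-f rawvideo`, and `-pix_fmt gray16le` options are standard FFmpeg flags, and the helper function names are hypothetical.

```python
import numpy as np


def sampling_rate_for_overlap(speed_m_s, fov_length_m, overlap):
    """Frame rate (fps) that yields the given overlap between consecutive FOVs.

    Consecutive frames are speed / fps metres apart, so
    overlap = 1 - (speed / fps) / fov_length, i.e.
    fps = speed / ((1 - overlap) * fov_length).
    """
    return speed_m_s / ((1.0 - overlap) * fov_length_m)


def depth_extract_cmd(mkv_path, out_path, fps, stream="0:v:1"):
    """Build an FFmpeg argument list that samples one video stream at `fps` and
    writes raw little-endian 16-bit grayscale frames with no lossy encoding.
    The default stream specifier "0:v:1" is an assumed MKV layout."""
    return ["ffmpeg", "-i", mkv_path, "-map", stream,
            "-vf", f"fps={fps}", "-f", "rawvideo", "-pix_fmt", "gray16le",
            out_path]


def load_gray16le(raw_bytes, width, height):
    """Reshape a raw gray16le byte stream into an (n_frames, height, width) uint16 array."""
    data = np.frombuffer(raw_bytes, dtype="<u2")
    if data.size % (width * height):
        raise ValueError("byte count is not a whole number of frames")
    return data.reshape(-1, height, width)


# Hypothetical usage: 0.5 m/s travel speed and a 0.65 m along-row FOV length
fps = sampling_rate_for_overlap(0.5, 0.65, 0.66)
cmd = depth_extract_cmd("row01.mkv", "row01_depth.raw", round(fps, 2))
print(" ".join(cmd))
# Run with subprocess.run(cmd, check=True) where ffmpeg is on PATH, then load the
# result with load_gray16le(open("row01_depth.raw", "rb").read(), width, height).
```

Writing raw frames avoids any image encoder's pixel-format restrictions, so the 16-bit depth values reach NumPy unchanged.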

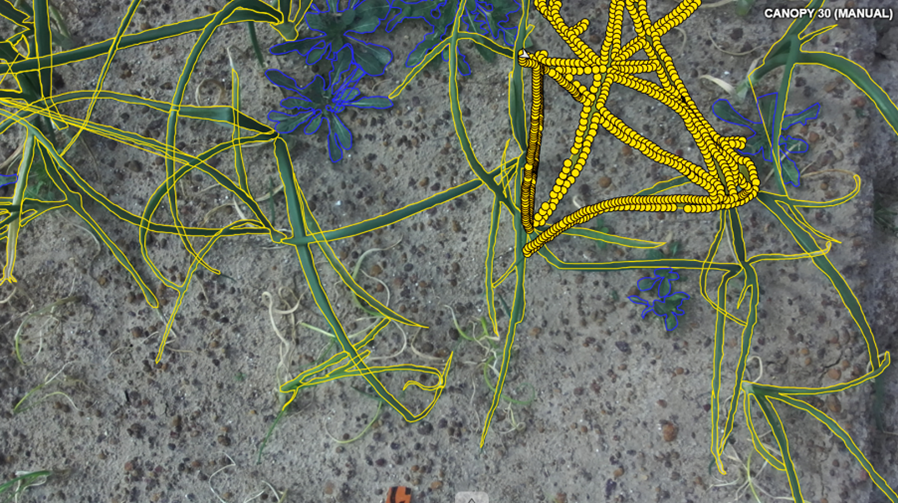

For data annotation, the CVAT (Computer Vision Annotation Tool) platform was used to label three distinct classes: "weed," "canopy," and "weed_cluster." The "weed" class included all non-crop plant species present within the onion rows, while the "canopy" class referred to the visible above-ground portion of Vidalia onion plants. The "weed_cluster" class was used for dense regions where multiple weeds overlapped and could not be clearly separated. Figure 4 shows an example of the annotated regions. A total of 100 annotations were manually created on selected frames extracted from the RGB video data.
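YOLO-family detectors were benchmarked for this project, so one plausible next step is exporting the CVAT labels to YOLO's text format. That format uses one `class cx cy w h` line per object, normalised to the image size. The sketch below assumes the annotations were exported as rectangles in CVAT's "CVAT for images" XML format and uses a hypothetical class-index mapping. Polygon or mask annotations would need to be converted to boxes first.

```python
import xml.etree.ElementTree as ET

# Hypothetical class-index mapping for the three annotation labels
CLASS_IDS = {"weed": 0, "canopy": 1, "weed_cluster": 2}


def cvat_boxes_to_yolo(xml_text):
    """Convert CVAT 'for images' XML box annotations to YOLO label lines.

    Returns {image_name: ["cls cx cy w h", ...]} with coordinates normalised
    to [0, 1] by image width and height. Non-box shapes are ignored.
    """
    root = ET.fromstring(xml_text)
    labels = {}
    for image in root.iter("image"):
        img_w, img_h = float(image.get("width")), float(image.get("height"))
        lines = []
        for box in image.iter("box"):
            cls = CLASS_IDS[box.get("label")]
            x1, y1 = float(box.get("xtl")), float(box.get("ytl"))
            x2, y2 = float(box.get("xbr")), float(box.get("ybr"))
            cx, cy = (x1 + x2) / 2.0 / img_w, (y1 + y2) / 2.0 / img_h
            bw, bh = (x2 - x1) / img_w, (y2 - y1) / img_h
            lines.append(f"{cls} {cx:.6f} {cy:.6f} {bw:.6f} {bh:.6f}")
        labels[image.get("name")] = lines
    return labels


sample = """<annotations>
  <image id="0" name="row01_000123.png" width="2208" height="1242">
    <box label="weed" occluded="0" xtl="1000" ytl="500" xbr="1100" ybr="620" z_order="0"/>
  </image>
</annotations>"""
print(cvat_boxes_to_yolo(sample))
```

Each list would then be written to a `.txt` file named after its image, the layout YOLO training tools typically expect.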

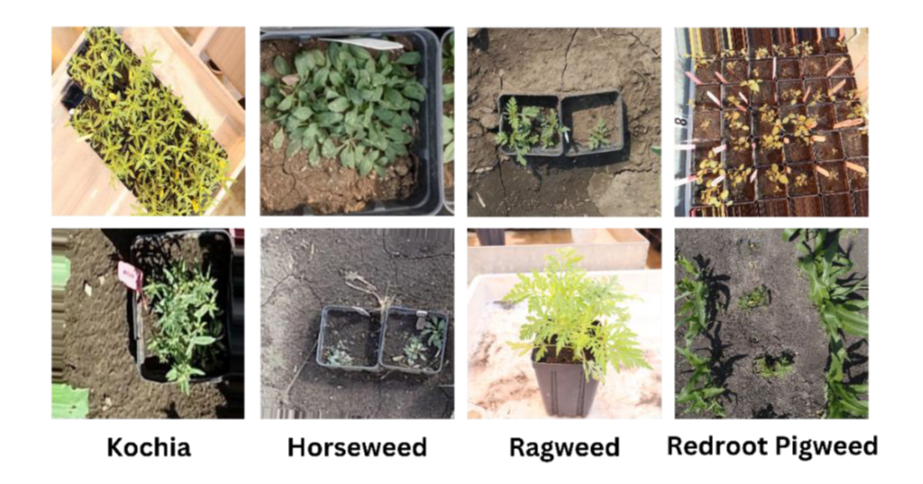

Data Processing

As a starting point for the weed detection system, a comparative analysis of state-of-the-art deep learning models was conducted using publicly available weed datasets. The study evaluated the single-shot object detection models YOLOv9, YOLOv8, RT-DETR, and YOLO-World on two open-source datasets. Dataset A, developed by Rai et al. (2023), contained 3,975 RGB images of four common North Dakota weed species (kochia, common ragweed, horseweed, and redroot pigweed), as shown in Figure 5. The images were captured with Canon 90D cameras and DJI Phantom 4 Pro drones in both greenhouse and field settings. The dataset includes over 7,700 annotated weed instances, providing strong spatial and temporal diversity for training. Dataset B, introduced by Sudars et al. (2020), consisted of 1,118 RGB images captured in greenhouse and field environments in Latvia using Intel RealSense D435, Canon EOS 800D, and Sony W800 cameras. It includes 7,853 annotated instances spanning eight weed species and six food crops, with varied growth stages and resolutions that reflect realistic field conditions. Using both datasets allowed model generalization and detection accuracy to be evaluated under varying lighting, crop density, and background complexity.

Objective 1:

Four deep learning models, YOLOv8, YOLOv9, YOLO-World, and RT-DETR, were evaluated for weed detection performance. Among them, YOLOv9 achieved the highest accuracy, with a mean Average Precision at an IoU threshold of 0.5 (mAP@50) of 0.94 on Dataset A and 0.85 on Dataset B. Figure 6 shows sample YOLOv9 inference results on the test dataset. YOLOv8 and RT-DETR followed with slightly lower performance, while YOLO-World had the lowest accuracy on both datasets. YOLOv9 maintained precision and recall above 0.80 across all weed classes after 60 training epochs. The F1-confidence analysis also showed consistently high F1 scores across a wide range of confidence thresholds, with the model performing particularly well on the kochia and ragweed classes.
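For readers less familiar with the metric, mAP@50 averages the per-class average precision (AP) over all classes. A detection counts as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth box of the same class is at least 0.5. The single-class sketch below assumes greedy matching in descending confidence order and all-point interpolation of the precision-recall curve. Evaluation toolkits differ in such details, so it will not reproduce any particular tool's numbers exactly. The F1 score in the F1-confidence analysis is the harmonic mean of precision and recall at a given confidence threshold.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """AP for one class. preds: [(image_id, score, box)], gts: {image_id: [box, ...]}."""
    n_gt = sum(len(v) for v in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {k: [False] * len(v) for k, v in gts.items()}
    tp = []
    for img, _score, box in sorted(preds, key=lambda p: -p[1]):
        best, best_iou = -1, iou_thr
        for j, g in enumerate(gts.get(img, [])):
            o = iou(box, g)
            if o >= best_iou and not matched[img][j]:
                best, best_iou = j, o
        if best >= 0:
            matched[img][best] = True
        tp.append(1 if best >= 0 else 0)
    # Precision and recall after each ranked prediction
    precisions, recalls, hits = [], [], 0
    for k, t in enumerate(tp, start=1):
        hits += t
        precisions.append(hits / k)
        recalls.append(hits / n_gt)
    # All-point interpolation: make precision non-increasing from right to left
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


gts = {"a": [(0, 0, 10, 10)], "b": [(0, 0, 10, 10)]}
preds = [("a", 0.9, (0, 0, 10, 10)), ("b", 0.8, (50, 50, 60, 60)), ("b", 0.7, (1, 1, 10, 10))]
print(average_precision(preds, gts), f1(0.9, 0.8))
```

mAP@50 is then the mean of `average_precision` over the weed classes.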

In terms of inference speed, YOLOv9 required 6 milliseconds per frame, significantly faster than RT-DETR (18 ms) and YOLO-World (11 ms). Although RT-DETR recorded a lower cross-entropy loss, its larger number of parameters and higher computational load reduced its practicality for real-time use. Dataset quality also influenced performance: Dataset A offered cleaner annotations but lower image resolution, while Dataset B provided diverse crop and weed classes but included duplicate and lower-quality images. YOLOv9’s superior performance across both datasets highlights its suitability for real-time weed detection.
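The reported per-frame times can be compared with the camera's 15 fps recording rate using simple arithmetic. The sketch assumes those timings carry over to the deployment hardware, which is not established here because the benchmarking hardware is not stated. Any remaining headroom must also cover depth lookup, motion planning, and laser actuation.

```python
def latency_budget(inference_ms, camera_fps):
    """Compare per-frame inference time with the camera's frame interval."""
    frame_interval_ms = 1000.0 / camera_fps
    return {
        "max_fps": 1000.0 / inference_ms,
        "frame_interval_ms": frame_interval_ms,
        "headroom_ms": frame_interval_ms - inference_ms,
    }


# Inference times reported above; 15 fps is the field recording rate
for name, ms in [("YOLOv9", 6.0), ("YOLO-World", 11.0), ("RT-DETR", 18.0)]:
    b = latency_budget(ms, camera_fps=15)
    print(f"{name}: up to {b['max_fps']:.0f} fps, "
          f"{b['headroom_ms']:.1f} ms left per frame at 15 fps")
```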

Discussion:

The combination of field-specific data acquisition, careful preprocessing, and early model benchmarking has provided critical insights into both system requirements and detection capabilities. The ZED 2i stereo vision camera enabled the collection of high-resolution RGB and depth video under realistic field conditions. Capturing video instead of still images significantly improved data collection efficiency while maintaining spatial and temporal consistency. Careful manual annotation in CVAT produced high-quality labeled data that is expected to improve future training results. Initial evaluations on open-source datasets provided a benchmark for model performance and helped define key hardware, software, and model architecture requirements for field-ready deployment. More importantly, comparing model performance across different datasets highlighted the importance of training on application-specific data to maximize generalization and precision in real environments.

Conclusion:

The progress made under Objective 1 demonstrates a strong foundation for developing a real-time weed detection system tailored to Vidalia onion fields. The successful collection, preprocessing, and annotation of RGB-D data from the UGA Vidalia Onion and Vegetable Research Center helped establish a high-quality, application-specific dataset. Benchmark testing using open-source datasets confirmed YOLOv9 as the most promising detection model. Future work will focus on adapting the selected model to utilize both RGB and depth modalities for improved detection and localization. Once the model is fine-tuned, it will be trained and evaluated using the annotated Vidalia onion dataset. The ultimate outcome of this objective is to develop a real-time weed detection system capable of identifying weeds along with their precise location for targeted laser-based elimination.
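One common way to give a detector both modalities is early fusion. Depth is normalised and stacked with RGB as a fourth input channel, which requires widening the network's first convolution layer. The report does not state whether this project will use early fusion or another scheme. The sketch below illustrates only the input-preparation step, with a placeholder working range (`near_mm`, `far_mm`) and invalid depth (zero or NaN) mapped to 0.

```python
import numpy as np


def fuse_rgbd(rgb_u8, depth_mm, near_mm=300.0, far_mm=1500.0):
    """Stack RGB and normalised depth into a float32 HxWx4 array in [0, 1].

    rgb_u8   -- HxWx3 uint8 image
    depth_mm -- HxW depth in millimetres aligned with the image
    Depth is clipped to [near_mm, far_mm]; zero or NaN pixels become 0.
    """
    rgb = rgb_u8.astype(np.float32) / 255.0
    d = depth_mm.astype(np.float32)
    valid = np.isfinite(d) & (d > 0)
    d = np.clip((d - near_mm) / (far_mm - near_mm), 0.0, 1.0)
    d[~valid] = 0.0
    return np.concatenate([rgb, d[..., None]], axis=-1)


rgb = np.zeros((2, 2, 3), dtype=np.uint8)
rgb[0, 0] = 255
depth = np.array([[0, 300], [900, 2000]], dtype=np.uint16)
x = fuse_rgbd(rgb, depth)
print(x.shape, x[..., 3])
```

Mapping invalid depth to the same value as the near limit is a simplification; a separate validity mask channel is another option if that ambiguity matters.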

Educational & Outreach Activities

Participation summary:

I will present at ASABE AIM 2025 in the ITSC – Information Technology, Sensors & Control Systems community, in two technical sessions: Robotics and AI-Enabled Robotics for Production Agriculture, and Machine Vision for Data-Driven Crop Management.

Project Outcomes

As this was the first year of the project, most of the effort focused on research and development and on disseminating findings through presentations at scientific conferences. In the second year, we aim to advance system deployment and achieve the proposed project outcomes.

Information Products

- Performance Evaluation of Single-Shot Detection Models for Weed Identification on Open-Source Datasets

- A Digital Twin-Enabled Approach for Precision Weed Management in Specialty Crops using a 4-DoF Robotic System

- Enhanced Weed Detection Using YOLOv9 on Open-Source Datasets for Precise Weed Management